3. Getting Started with Reactis for Simulink

This chapter provides a quick overview of Reactis for Simulink. It describes the main components of the tool suite through an extended guided tour of its features. The tour uses as a running example a Simulink / Stateflow model of an automobile adaptive cruise control, which is included in the Reactis distribution.

3.1. A Note on Model Preparation

The adaptive cruise control example included with the distribution does not require any special processing before you run Reactis on it. You can load the model and start the guided tour. However, there is an important step that you should undertake when you are preparing models for use with Reactis. This section describes this preparatory step and discusses the Simulink operations needed to perform it.

Reactis supports a large portion of the discrete-time subset of Simulink and Stateflow. As it imports a Simulink / Stateflow model for testing, it interacts with MATLAB in order to evaluate MATLAB expressions used to initialize the model, including references to workspace data items used by the model.

In order for Reactis to process a model, Reactis must be able to automatically create the MATLAB base workspace data used by the model. For this reason, any base workspace data items that a model uses must be initialized within one of the following locations (for more details see Workspace Data Items):

- Any Simulink model callback or block callback that is executed when loading or running the model (PreLoadFcn, PostLoadFcn, InitFcn, StartFcn).

- A startup.m file located in the folder where the model file is located.

- The Callbacks pane of the Reactis Info File Editor.

Note that variables in the model workspace are stored in the .slx file for a model, as is a connection to any Simulink Data Dictionary used by the model. Reactis can therefore automatically process variables from the model workspace or a Simulink Data Dictionary.

Furthermore, the V2025.2 version of Reactis added support for MATLAB Projects (previously called Simulink Projects) so the following steps are not necessary when you are working with a model within a MATLAB project that initializes the base workspace.

Below we describe how the workspace initialization was established in the adaptive cruise control model file included in the Reactis distribution. In that example the MATLAB file adaptive_cruise_data.m defines some workspace variables that are used in the Simulink model file adaptive_cruise.slx. The adaptive_cruise_data.m file was attached to adaptive_cruise.slx using the following steps.

Load adaptive_cruise.slx into Simulink.

In the Simulink window, in the toolbar, click the down arrow below the Model Settings gear.

In the resulting menu select Model Properties[1].

In the resulting dialog, select the Callbacks tab.

In the PreLoadFcn section, enter adaptive_cruise_data; (note that the .m suffix is not included).

Save the model.

This saved adaptive_cruise.slx file is distributed with Reactis, so you do not need to undertake the above steps yourself in order to load and process this file in Reactis. The above steps are cited only for illustrative purposes.

3.2. Reactis Top Level

The Reactis top-level window contains menus and a tool bar for launching and controlling verification and validation activities. Reactis is invoked as follows.

Step 1

Select Reactis V2025.2 from the Windows Start menu or double-click on the desktop shortcut if you installed one.

You now see a Reactis window like the one shown in Figure 3.1.

Fig. 3.1 The Reactis window.

A model may be selected for analysis as follows.

Step 2

Click the file open button located at the leftmost position on the tool bar. Then use the resulting file-selection dialog to choose the file adaptive_cruise.slx from the examples folder of the Reactis distribution.[2]

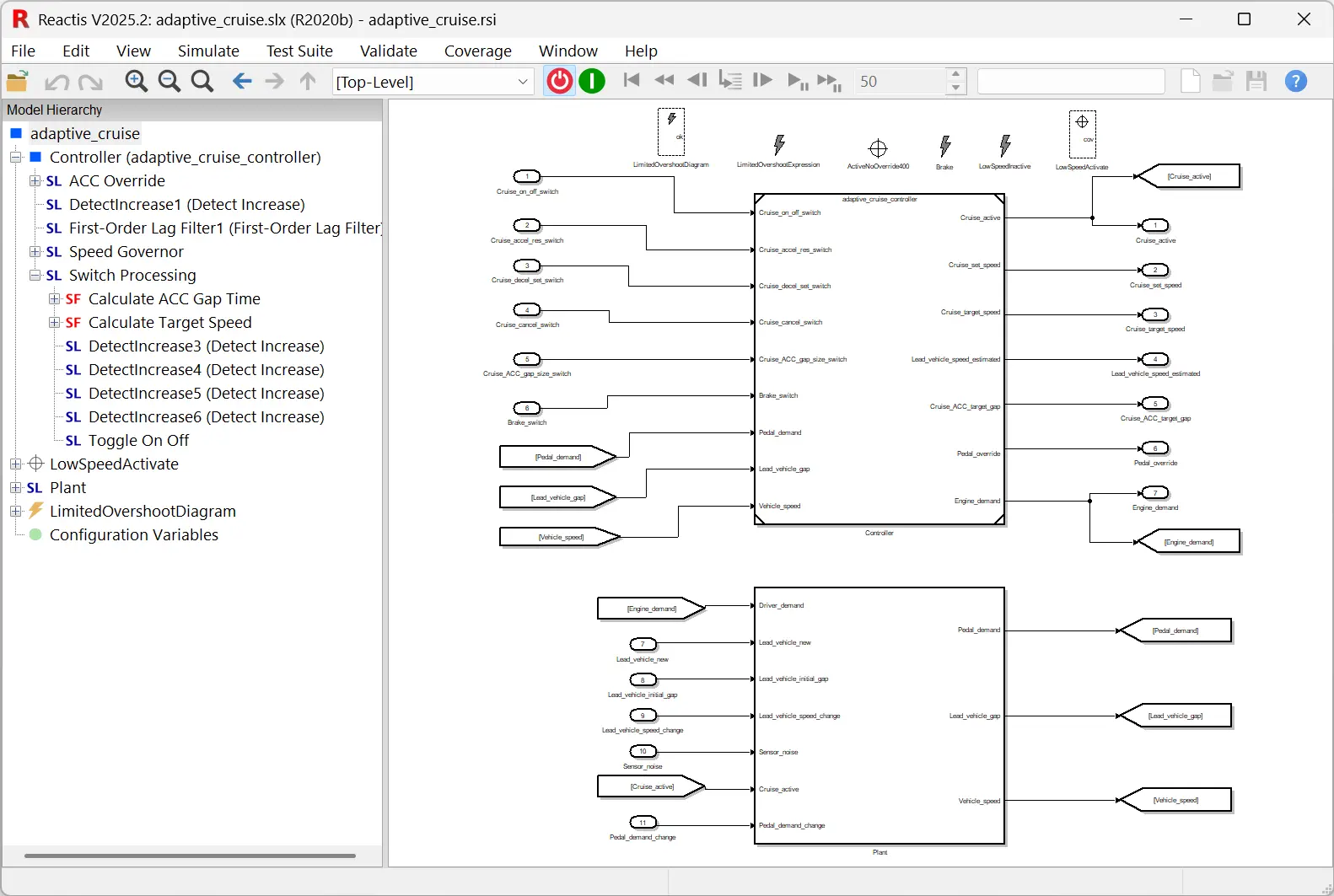

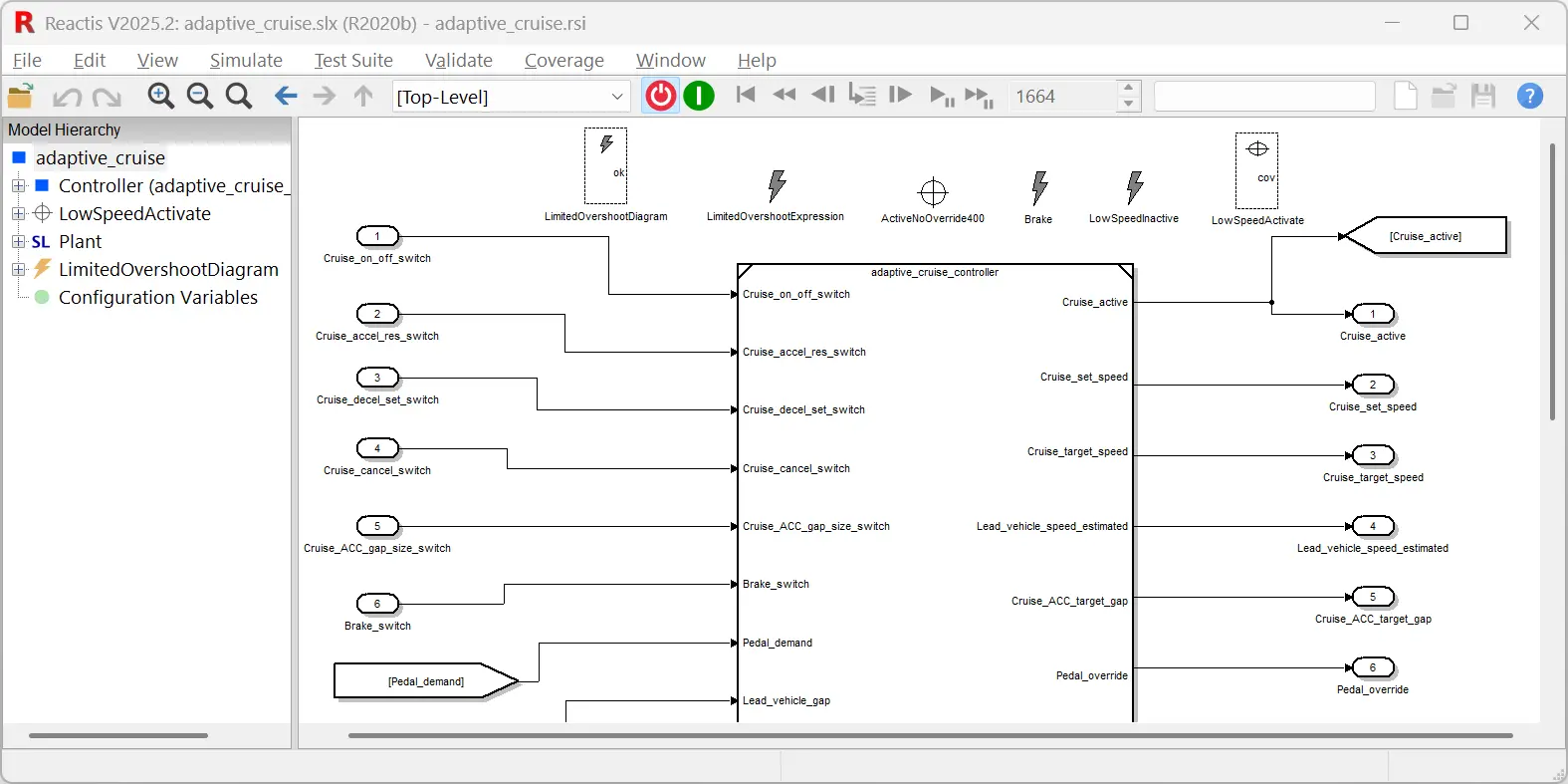

Loading the model causes the top-level window to change as shown in Figure 3.2. The panel to the right shows the top-level Simulink diagram of the model. The panel to the left shows the model hierarchy. In addition, the title bar now reports the model currently loaded, namely adaptive_cruise.slx, and the Reactis info file (.rsi file) adaptive_cruise.rsi that contains testing information maintained by Reactis for the model. .rsi files are explained in more detail below in Info File Editor.

It is also worth noting that if during installation you chose to associate the .rsi file extension with Reactis, then you can start Reactis and open adaptive_cruise.slx in a single step by double-clicking on adaptive_cruise.rsi in Windows Explorer.

Fig. 3.2 Reactis after loading adaptive_cruise.slx.

Subsystems in the hierarchy panel are tagged with icons indicating whether they are Simulink (SL), Stateflow (SF), Embedded MATLAB (ML)[3], C source files (C), C libraries (LIB), S-Functions (FN)[4], assertions, or user-defined targets.

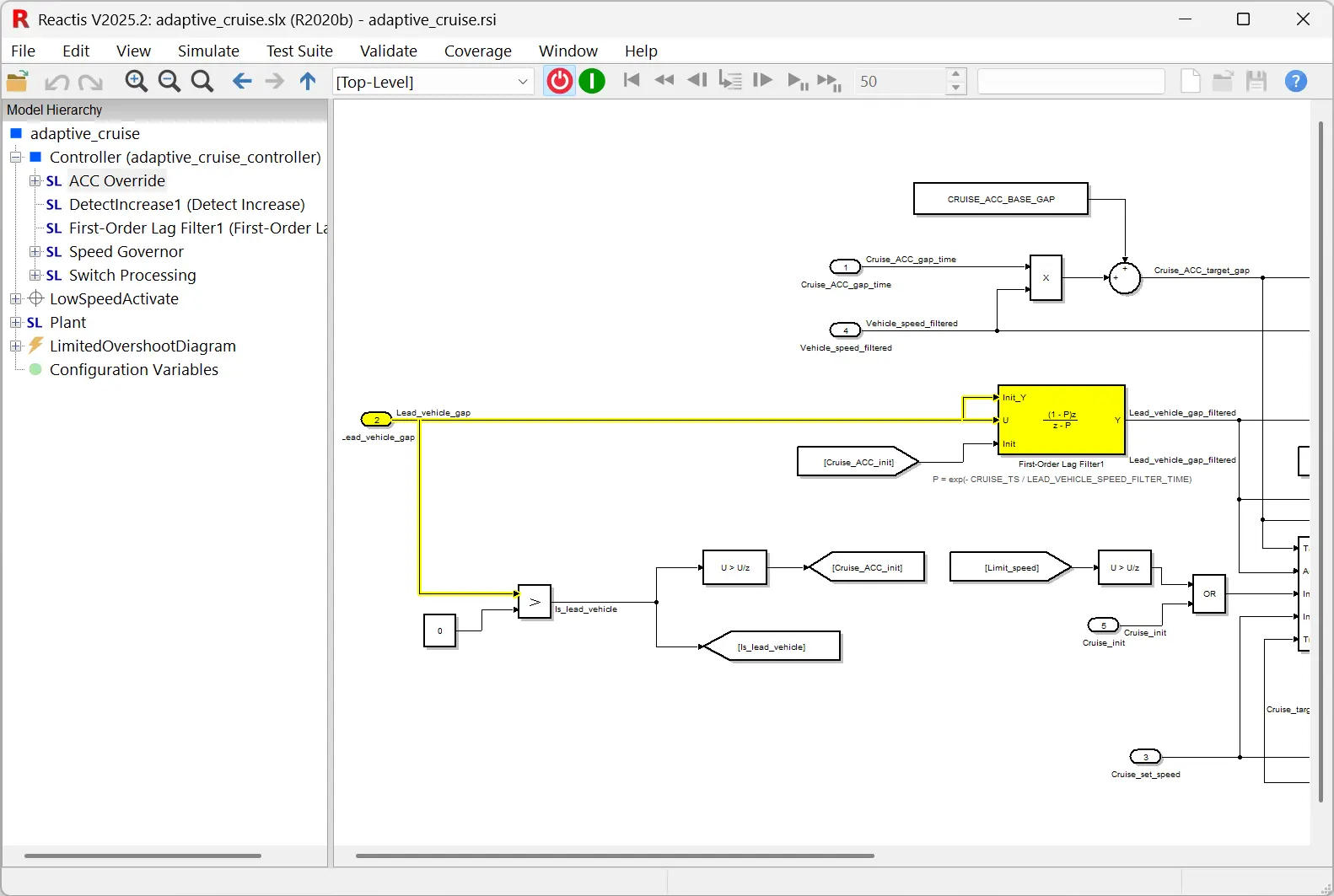

Reactis provides a signal-tracing mechanism which allows the path taken by a signal to be quickly identified. To trace a signal, left-click on any part of the signal line.

Step 3

In the model hierarchy panel, click on the plus to the left of the Controller referenced model to expand the hierarchy to display its subsystems.

In the hierarchy panel, click on ACC Override. This causes the ACC Override subsystem to be displayed in the main panel.

Place the mouse cursor in the main panel, then hold down the Control key and scroll with the mouse wheel to zoom in a bit.

In the main panel, left-click on the signal line emerging from the Lead_vehicle_gap inport (inport 2). The result should be similar to Figure 3.3.

Click on the button twice to see how to trace the signal through different levels of the model hierarchy.

As shown in Figure 3.3, signals are highlighted in yellow when left-clicked on. The route of the highlighted signal can then be easily identified. To turn off the highlighting, left-click on empty space in the main window.

Fig. 3.3 Highlighting a signal.

3.3. The Info File Editor

Reactis does not modify the .slx file for a model. Instead the tool stores model-specific information that it requires in an .rsi file. The primary way for you to view and edit the data in these files is via the Reactis Info File Editor, which is described briefly in this section and in more detail in the Reactis Info File Editor chapter. You may also manipulate .rsi files using the Reactis API.

The next stop in the guided tour explains how this editor may be invoked on adaptive_cruise.rsi.

Step 4

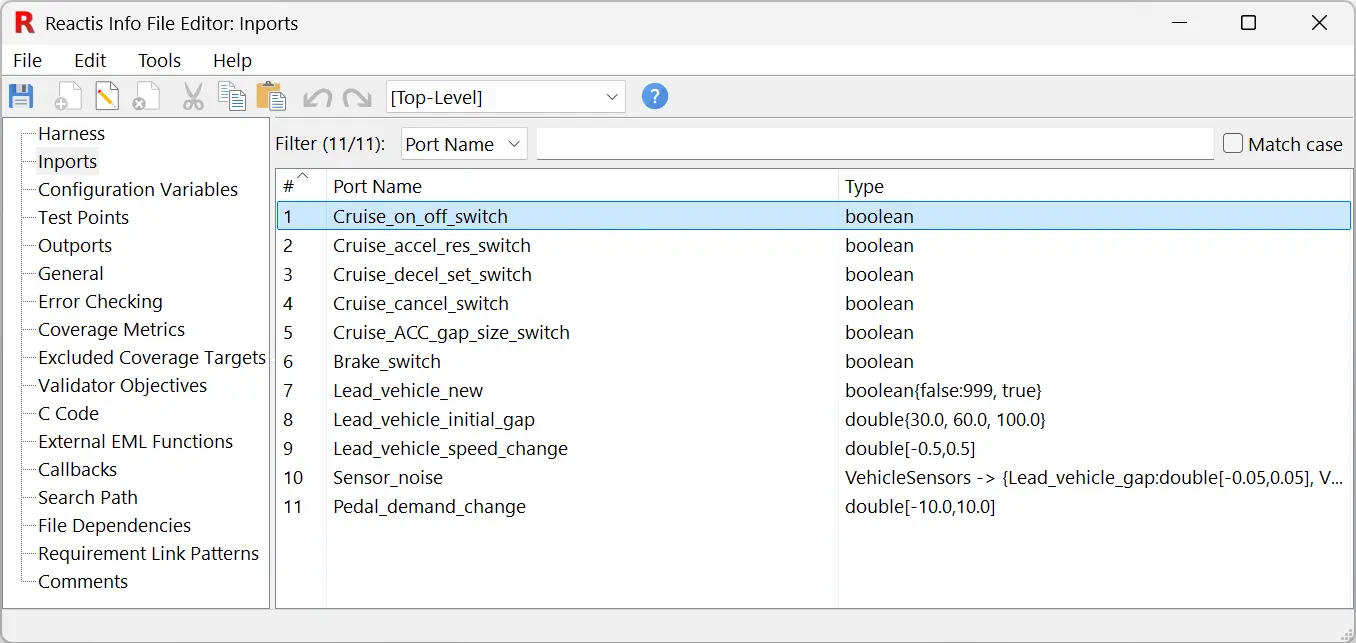

Select the Edit > Inports… menu item.

This starts the Reactis Info File Editor, as shown in Figure 3.4. Note that the contents of the .rsi file may only be modified when Simulator is disabled. When Simulator is running, the Info File Editor operates in read-only mode as indicated by “[read only]” in the editor window’s title bar.

Fig. 3.4 The Reactis Info File Editor.

.rsi files contain directives partitioned among the following panes in the Info File Editor.

- Harness.

A harness specifies how a model is tested. The default harness [Top-Level] is for testing the full model. A harness can be created for any subsystem in a model by right-clicking on the subsystem and selecting Create New Harness…. Learn more in Test Harness Pane.

- Inports.

A type for each harness inport is used by Reactis to constrain the set of values fed into harness inports during simulation, test-case generation, and model validation. For example, you can specify an inport range by giving minimum and maximum values. Learn more in Inports pane.

- Configuration Variables.

Configuration variables are user-selected variables from the base workspace, model workspace, or a data dictionary which can change in between tests in a test suite (but not during a test). Learn more in Configuration Variables Pane.

- Test Points.

Test points are internal signals which have been selected (by the user) to be logged in test suites. Learn more in Test Points Pane.

- Outports.

Each outport of a harness may be assigned a tolerance and a set of targeted intervals. When executing a test suite on a model in Reactis Simulator, the tolerance specifies the maximum acceptable difference between the value computed by the model for the outport and the value stored in a test suite for the outport.

Interval Coverage tracks, for each interval assigned to the outport, whether the output has been assigned a value from that interval during testing. The automatically managed interval ~y0 tracks whether the output has had a value different from its initial value. Each row of the Outports pane lists the name, tolerance, and interval targets for a specific outport. Double-clicking on a row in the pane opens a dialog that lets you modify the tolerance or intervals for the outport. Learn more in Outports Pane.

- General.

Various settings that determine how a model executes in Reactis. Learn more in General Pane.

- Error Checking.

Specify how Reactis should respond to various types of errors (e.g. overflow, NaN, etc.). Learn more in Error Checking Pane.

- Coverage Metrics.

Specify which coverage metrics will be tracked for the model and adjust the configurable metrics (CSEPT, MC/DC, MCC, Boundary, Interval). Learn more in Coverage Metrics Pane.

- Excluded Coverage Targets.

Individual targets to be ignored when measuring the coverage of a model. Learn more in Excluded Coverage Targets Pane.

- Validator Objectives.

Validator objectives (including assertions, user-defined targets, and virtual sources) are instrumentation that you may provide to check if a model meets its requirements. You can double-click on an item in this list to highlight the location of the objective in the model. Learn more in Validator Objectives Pane.

- C Code.

If the Reactis for C Plugin is installed, this pane will contain information about C-coded S-functions, C code embedded within Stateflow charts and C library code called from either an S-function or Stateflow. Learn more in Reactis for C Plugin.

- External EML Functions.

If the Reactis for EML Plugin is installed, this pane lets you specify any external Embedded MATLAB functions used by your model (i.e. functions stored in .m files outside your model). Learn more in Reactis for EML Plugin.

- Callbacks.

These are fragments of MATLAB code that Reactis will run before or after loading a model in Simulink. Note that these callbacks are distinct from those maintained by Simulink.

- Search Path.

The model-specific search path is prepended to the global search path to specify the list of folders (for the current model) in which Reactis will search for files such as Simulink model libraries (.slx), MATLAB scripts (.m), and S-Functions (.dll, .mexw32, .m). Learn more in Search Path Pane.

- File Dependencies.

A list of files on which the current model depends. The file-dependency information enables Reactis to track changes in auxiliary model files so that information obtained from them for the purposes of processing the current model may be kept up to date. Typically, files listed here would include any .m files loaded as a result of executing the current model’s pre-load function. For example, a .m file mentioned in the pre-load function might itself load another .m file; this second .m file (along with the first) should be listed as a dependency in order to ensure that Reactis behaves properly should this .m file change. Dependencies on libraries (.slx files) are detected automatically and need not be listed here. Learn more in File Dependencies Pane.

- Requirement Link Patterns

A list of patterns that can be used to link Validator objectives to requirements stored in an external tool.

- Comments

A list of informational user annotations of the model.
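The interval targets described under the Outports pane can be pictured with a small Python sketch. It is only an illustration of the bookkeeping: the class names, the closed-interval test, and the choice to treat the first recorded value as the initial value are assumptions made here, not Reactis behavior or API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class IntervalTarget:
    """One targeted interval for an outport (bounds assumed inclusive)."""
    low: float
    high: float
    covered: bool = False


@dataclass
class OutportCoverage:
    """Hypothetical per-outport record of interval coverage."""
    intervals: List[IntervalTarget]
    initial_value: Optional[float] = None
    changed_from_initial: bool = False  # plays the role of the ~y0 target

    def record(self, value: float) -> None:
        # Assumption: the first value seen is the outport's initial value.
        if self.initial_value is None:
            self.initial_value = value
        elif value != self.initial_value:
            self.changed_from_initial = True
        # Mark every interval that contains the new value.
        for target in self.intervals:
            if target.low <= value <= target.high:
                target.covered = True


coverage = OutportCoverage([IntervalTarget(0.0, 10.0), IntervalTarget(20.0, 30.0)])
for output_value in [0.0, 0.0, 5.0]:
    coverage.record(output_value)
```

After these three steps the first interval is covered, the second is not, and the ~y0-style flag is set because the output moved away from its initial value.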

Step 5

To modify the type for an inport, select the appropriate row, then right-click on it and select Edit…, and make the desired change in the resulting dialog. Save the change and close the type editor by clicking Ok or close without saving by clicking Cancel.

The types that may be specified are the base Simulink / Stateflow types extended with notations to define ranges, subsets and resolutions, and to constrain the allowable changes in value from one simulation step to the next. More precisely, acceptable types can have the following basic forms.

- Complete range of base type:

By default the type associated with an inport is the Simulink / Stateflow base type inferred from the model. Allowed base types include: int8, int16, int32, uint8, uint16, uint32, boolean, single, double, sfix*, and ufix*.

- Subrange of base type:

bt[i, j], where bt is a Simulink / Stateflow base type, and i and j are elements of type bt, with i being a lower bound and j an upper bound.

- Subrange with resolution type:

bt [i:j:k], where bt is a double, single, or integer type, i is a lower bound, j is a resolution, and k is an upper bound; all of i, j and k must be of type bt. The allowed values that an inport of this type may have are of form i + n × j, where n is a non-negative integer such that i + n × j ≤ k. In other words, each value that an inport of this type may assume must fall between i and k, inclusive, and differ from i by some integer multiple of j.

- Set of specific values:

bt{ e1, … en }, where bt is a base type and e1, … en are either elements of type bt, or expressions of form v:w, where v is an element of type bt and w, a positive integer, is a probability weight. These weights may be used to influence the relative likelihood that Reactis will select a particular value when it needs to select a value randomly to assign to an inport having a subset type. If the probability weight is omitted it is assumed to be 1. For example, an inport having type uint{0:1, 1:3} would get the value 0 in 25% of random simulation steps and the value 1 in 75% of random simulation steps, on average.

- Delta type:

tp delta[i,j], where tp is either a base type, a range type, or a resolution type, and i and j are elements of the underlying base type of tp. Delta types allow bounds to be placed on the changes in value that variables may undergo from one simulation step to the next. The value i specifies a lower bound, and j specifies an upper bound, on the size of this change between any two successive simulation steps. More precisely, if a variable has this type, and v and v’ are values assumed by this variable in successive simulation steps, with v’ the later value, then the following mathematical relationship holds: i ≤ v’ - v ≤ j. Note that if i is negative then values are allowed to decrease as simulation progresses.

- Conditional type:

if expr then tp1 else tp2, where expr is a boolean expression and tp1 and tp2 are type constraints. If expr is true then the input assumes a value specified by tp1; otherwise it assumes a value specified by tp2. Currently, the only variable allowed in expr is the simulation time, expressed as t.
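The resolution and weighted-set forms in the list above can be made concrete with a short Python sketch. The helper names are hypothetical and are not part of Reactis; the code only mirrors the arithmetic stated in the text (values of form i + n × j up to k, and weights that default to 1).

```python
from fractions import Fraction


def resolution_values(lower, step, upper):
    """List the values i + n*j (n = 0, 1, 2, ...) that do not exceed k."""
    # Exact rational arithmetic avoids floating-point drift in the loop.
    lo, st, hi = (Fraction(str(x)) for x in (lower, step, upper))
    if st <= 0:
        raise ValueError("resolution must be positive")
    values = []
    n = 0
    while lo + n * st <= hi:
        values.append(float(lo + n * st))
        n += 1
    return values


def selection_probabilities(weighted_values):
    """Turn a {value: weight} mapping into {value: probability}."""
    total = sum(weighted_values.values())
    return {value: weight / total for value, weight in weighted_values.items()}


# A resolution type with bounds 0.0 and 2.0 and resolution 0.5.
print(resolution_values(0.0, 0.5, 2.0))
# A set type with weights 0:1 and 1:3, as in the example in the text.
print(selection_probabilities({0: 1, 1: 3}))
```

The second call reproduces the 25% and 75% split described for the weighted set example.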

Table 3.1 gives examples of types and the values they contain. For vector, matrix or bus inports, the above types can be specified for each element independently.

| RSI Type | Values in Type |
|---|---|
| | All double-precision floating-point numbers between 0.0 and 4.0, inclusive. |
| | -1, 0, 1 |
| | 0, 4, 7 |
| | 0, 1 |
| | 0.0, 0.5, 1.0, 1.5, 2.0 |
| | 0, 1, 2, 3; inports of this type can increase or decrease by at most 1 in successive simulation steps. |
| | At simulation time zero, input has value 0.0; subsequently, input is between 0.0 and 10.0. |
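As a rough illustration of the delta and conditional forms, the Python sketch below checks a value sequence against the relationship i ≤ v’ - v ≤ j and encodes the last behavior described in the table as a function of the simulation time t. Both function names are invented for this example, and the (low, high) tuple is just one convenient way to represent a range; none of this is Reactis syntax.

```python
def satisfies_delta(values, low, high):
    """Check low <= later - earlier <= high for every pair of successive values."""
    return all(low <= later - earlier <= high
               for earlier, later in zip(values, values[1:]))


def allowed_range_at(t):
    """Conditional constraint: exactly 0.0 at time zero, then anywhere in [0.0, 10.0]."""
    return (0.0, 0.0) if t == 0 else (0.0, 10.0)


# Values may rise or fall by at most 1 per step.
print(satisfies_delta([0, 1, 2, 3, 2], -1, 1))
# A jump of 2 between steps violates the same bounds.
print(satisfies_delta([0, 2], -1, 1))
print(allowed_range_at(0), allowed_range_at(0.1))
```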

If any change is made to a port type, then “[modified]” appears in the title bars of the Info File Editor and the top-level Reactis window. You may save changes to disk by selecting File > Save from the Info File Editor or File > Save Info File from the top-level window.

If no .rsi file exists for a model, Reactis will create a default file the first time you open the Info File Editor, or start Simulator or Tester. The default type for each inport is the base type inferred for the port from the model. If you add or remove an inport to your model you can synchronize your .rsi file with the new version of the model by selecting Tools > Synchronize Inports, Outports, Test Points from the Info File Editor.

Step 6

Select the File > Exit menu item on the editor’s tool bar to close the Reactis Info File Editor.

3.4. Simulator

Reactis Simulator provides an array of facilities for viewing the execution of models. To continue with the guided tour:

Step 7

Click the enable Simulator button in the tool bar to start Reactis Simulator.

This causes Reactis to begin importing the model into Reactis. The status of the import can be tracked in the bottom left corner of the Reactis window. When the import completes, a number of the tool-bar buttons that were previously disabled become enabled.

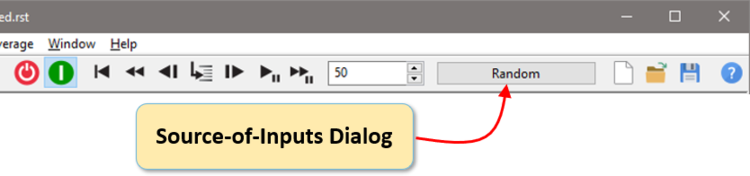

Simulator performs simulations in a step-by-step manner: at each simulation step inputs are generated for each harness inport, and resultant outputs reported on each outport. You can control how Simulator computes harness inport values using the Source-of-Inputs dialog as follows. Simulator may be directed to:

generate values randomly (this is the default),

query the user,

read inputs from a Reactis test suite. Such tests may have been generated automatically by Reactis Tester, constructed manually in Reactis Simulator, or imported from a file storing test data in a comma-separated-value format.

To set where inputs come from, use the Source-of-Inputs dialog located in the tool bar (see Figure 3.5). The next part of the guided tour illustrates the use of each of these input sources and describes coverage tracking, data-value tracking, and other features of Simulator.

Fig. 3.5 The Source-of-Inputs dialog allows you to control how Reactis Simulator generates values for harness inports when executing a model.

3.4.1. Generating Random Inputs

As the random input source is the default, no action needs to be taken to set this input mode.

Step 8

In the model hierarchy panel on the left of the Simulator window, click on adaptive_cruise/Controller/Switch Processing/Calculate Target Speed in order to display the Stateflow diagram in the main window. Then, click the Run/Pause Simulation button.

During simulation, states and transitions in the diagram are highlighted in green as they are entered and executed. The simulation stops automatically when the number of simulation steps reaches the figure contained in the entry box to the left of the Source-of-Inputs dialog. Before then, you may pause the simulation by clicking the Run/Pause Simulation button a second time. Note that simulation will likely pause in the middle of a simulation step. You may then use the stepping buttons in the tool bar to continue executing a block at a time, to complete the step, or to back up to the start of the step. The fast simulation button works like the Run/Pause Simulation button, but without animation, so simulation is much faster.

3.4.2. Tracking Model Coverage

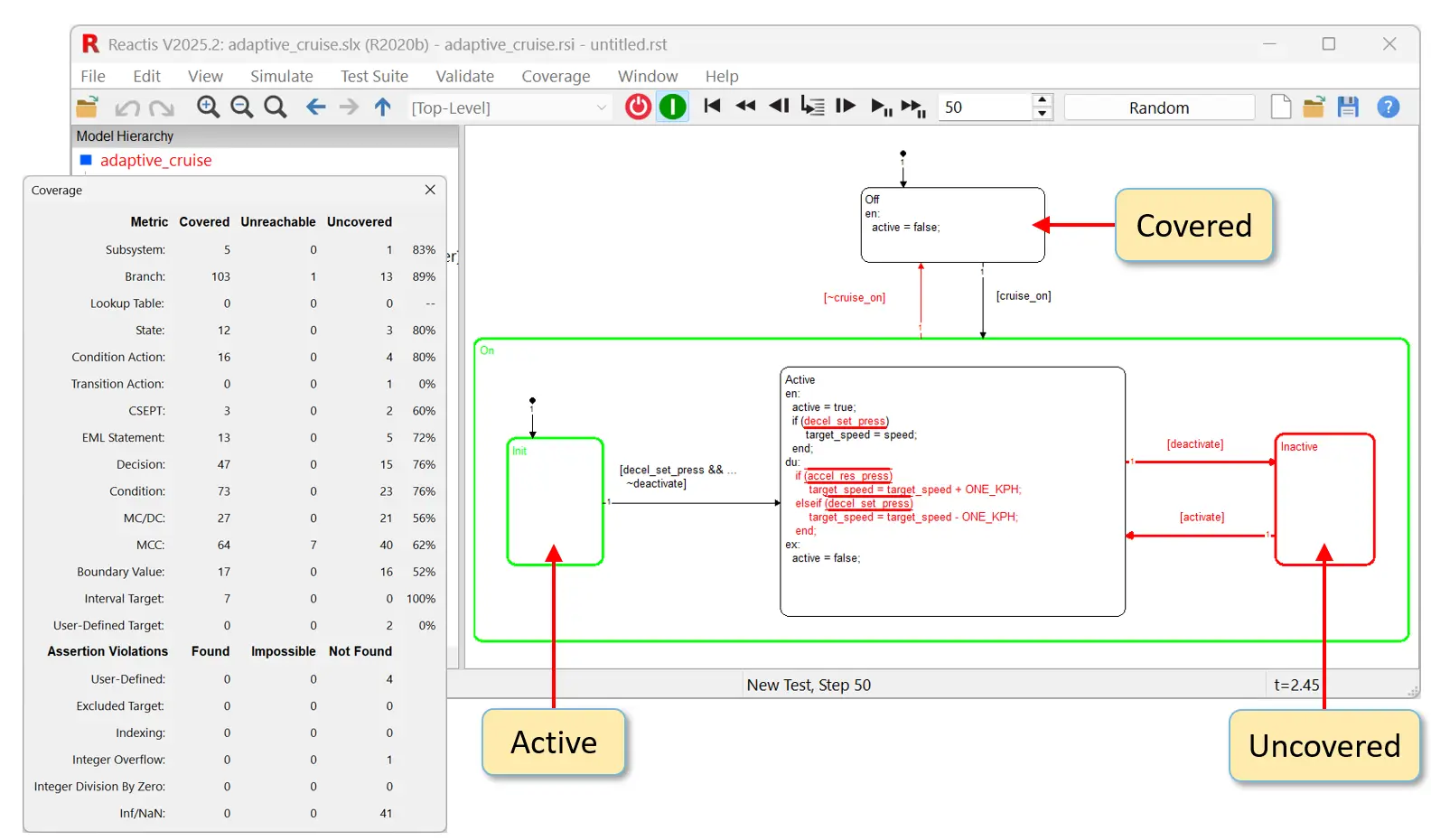

While Simulator is running you may also track coverage information regarding which parts of your model have been executed and which have not. These coverage-tracking features work for all input-source modes. The next portion of the guided tour illustrates how these features are used.

Step 9

Make sure menu item Coverage > Show Details is selected (as indicated by a check mark in the menu). Select menu item Coverage > Show Summary.

Fig. 3.6 The Reactis coverage tracking features convey which parts of a model have been exercised. Note that the coverage information you see at this point will be different than that shown in the figure. This is because a different sequence of inputs (from the random ones you used) brought the simulation to the displayed state.

A dialog summarizing coverage of the different metrics tracked by Reactis now appears. In the main panel, elements of the diagram not yet exercised are drawn in red, as shown in Figure 3.6. Note that poor coverage is not uncommon with random simulation. We’ll soon see how to get better coverage using Reactis Tester. You may hover over a covered element to determine the (1) test (in the case being considered here, the “test” is the current simulation run and is rendered as . in the hovering information) and (2) step within the test that first exercised the item. This type of test and step coverage information is displayed with a message of the form test/step. You may view detailed information for the decision, condition, and MC/DC coverage metrics, which involve Simulink logic blocks and Stateflow transition segments whose label includes an event and/or condition, as follows.

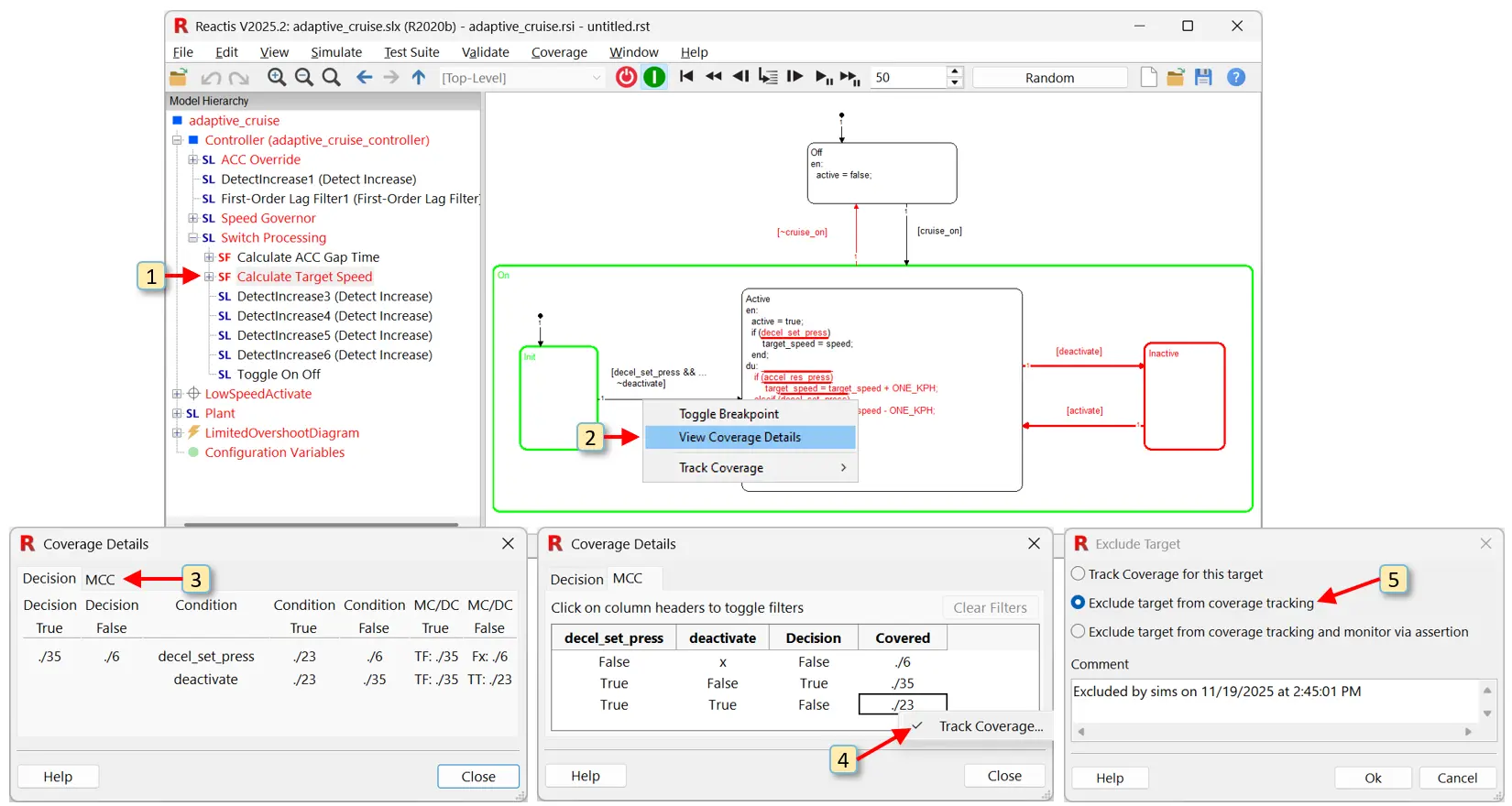

Step 10

Steps 1 and 2 below show how to open the Coverage Details dialog for a decision on a Stateflow transition. Steps 3 through 6 demonstrate how to exclude an individual coverage target from being tracked.

In the Model Hierarchy panel on the left of the Reactis window, click on adaptive_cruise/Controller/Switch Processing/Calculate Target Speed to display the Stateflow diagram in the main panel. Note that this was done in a previous step, so it may not be necessary here.

Right-click on the transition from state Init to state Active, and select View Coverage Details. A Coverage Details dialog will appear.

In the Coverage Details dialog, select the MCC tab to display and inspect information related to multiple condition coverage.

Right-click on the bottom cell in the Covered column of the MCC table and then select Track Coverage. An Exclude Target dialog will appear.

In the Exclude Target dialog, select Exclude this target from coverage and click on Ok to close the dialog. The status of the MCC target will change to excluded.

Right-click on the bottom cell in the Coverage column of the MCC table and open the Exclude Target dialog a second time. Select Track coverage for this target and click on Ok to close the dialog. The status of the MCC target will change back to its original value.

Fig. 3.7 The Coverage Details dialog includes two tabs: Decision and MCC. The Decision tab includes details of decision coverage, condition coverage, and MC/DC. The MCC tab shows multiple condition coverage.

The Coverage Details dialog, shown in Figure 3.7, has two tabs: Decision and MCC. The Decision tab displays details for decision coverage, condition coverage, and MC/DC. The table in this figure gives information for the decision decel_set_press & ~deactivate. This decision contains two conditions: decel_set_press and deactivate.

Conditions are the atomic boolean expressions that are used in decisions. The first two columns of the table list the test/step information for when the decision first evaluated to true and when it first evaluated to false. A value -/- indicates that the target has not yet been covered. The third column lists the conditions that make up the decision, while the fourth and fifth columns give test/step information for when each condition was evaluated to true and to false.

MC/DC Coverage requires that each condition independently affect the outcome of the decision in which it resides. When a condition has met the MC/DC criterion in a set of tests, the sixth and seventh columns of the table explain how. Each element of these two columns has the form b1 b2 … bn:test/step, where bi reports the outcome of evaluating condition i in the decision (as counted from left to right in the decision or top to bottom in column three) during the test and step specified. Each bi is either T to indicate the condition evaluated to true, F to indicate the condition evaluated to false, or x to mean the condition was not evaluated due to short-circuiting.
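The independence requirement can be sketched in Python for the example decision decel_set_press & ~deactivate. This is an illustrative model only: it assumes left-to-right short-circuit evaluation, treats an unevaluated condition (x) as compatible with any value when comparing two runs, and uses function names invented for this example. How Reactis itself selects the pairs it reports is not specified here.

```python
from itertools import combinations


def evaluate(decel_set_press, deactivate):
    """Evaluate decel_set_press & ~deactivate left to right with short-circuiting.

    Returns (vector, outcome); vector holds 'T', 'F', or 'x' for each condition.
    """
    if not decel_set_press:
        # The second condition is skipped, so it is recorded as 'x'.
        return ("F", "x"), False
    return ("T", "T" if deactivate else "F"), not deactivate


def shows_independence(run_a, run_b, index):
    """True if the runs differ in outcome and, among conditions, only at `index`."""
    (vec_a, out_a), (vec_b, out_b) = run_a, run_b
    if out_a == out_b or {vec_a[index], vec_b[index]} != {"T", "F"}:
        return False
    # Other conditions must match; 'x' is assumed to match anything.
    return all(a == b or "x" in (a, b)
               for i, (a, b) in enumerate(zip(vec_a, vec_b)) if i != index)


def mcdc_status(runs, n_conditions=2):
    """For each condition, report whether some pair of runs shows its independence."""
    return [any(shows_independence(a, b, i) for a, b in combinations(runs, 2))
            for i in range(n_conditions)]


def format_entry(vector, test, step):
    """Render a vector in the b1 b2 ... bn:test/step form described above."""
    return f"{' '.join(vector)}:{test}/{step}"


runs = [evaluate(True, False), evaluate(False, False), evaluate(True, True)]
print(mcdc_status(runs))
print(format_entry(runs[0][0], ".", 12))
```

With only the first two runs, the sketch reports the first condition as satisfied and the second as not, because no run has yet evaluated deactivate to true.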

The MCC tab of the Coverage Details dialog displays details of multiple condition coverage (MCC), which tracks whether all possible combinations of condition outcomes for a decision have been exercised. The table includes a column for each condition in the decision. The column header is the condition and each subsequent row contains an outcome for the condition: True, False, or x (x indicates the condition was not evaluated due to short-circuiting). Each row also contains the outcome of the decision (True or False) and, when covered, the test and step during which the combination was first exercised.
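To see which rows such an MCC table would contain for decel_set_press & ~deactivate, the following Python sketch enumerates the distinct combinations. It assumes left-to-right short-circuit evaluation, so combinations that differ only in an unevaluated condition collapse into a single row; the function name is hypothetical.

```python
from itertools import product


def mcc_rows():
    """Enumerate distinct (condition outcomes, decision outcome) rows."""
    rows = []
    for decel_set_press, deactivate in product([True, False], repeat=2):
        if decel_set_press:
            conditions = ("True", "True" if deactivate else "False")
        else:
            # deactivate is never evaluated when the first condition is false.
            conditions = ("False", "x")
        row = (conditions, decel_set_press and not deactivate)
        if row not in rows:
            rows.append(row)
    return rows


for conditions, outcome in mcc_rows():
    print(conditions, outcome)
```

Under these assumptions the four raw input combinations reduce to three rows, one of which makes the decision true.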

Note that the previous scenario relied on randomly generated input data, so replaying the steps outlined above will yield different coverage information than that depicted in Figure 3.7.

An alternative way to query coverage information is to invoke the Coverage-Report Browser by selecting Coverage > Show Report. This is a tool for viewing or exporting coverage information that explains which model elements have been covered along with the test and step where they were first exercised. Yet another way to inspect test coverage is with Simulate > Fast Run With Report…. In addition to coverage data, these reports can contain other types of information such as plots showing expected outputs compared to actual outputs.
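The test/step bookkeeping that these reports expose can be pictured as a map from each coverage target to the first test and step that exercised it. The class below is a minimal Python sketch under that assumption; the class name and target labels are made up for illustration and do not correspond to any Reactis API.

```python
class CoverageLog:
    """Remember the first (test, step) that exercised each target."""

    def __init__(self):
        self.first_hit = {}

    def record(self, target, test, step):
        # setdefault keeps the earliest entry and ignores later hits.
        self.first_hit.setdefault(target, (test, step))

    def describe(self, target):
        """Return 'test/step' for a covered target, or '-/-' if uncovered."""
        hit = self.first_hit.get(target)
        return "-/-" if hit is None else f"{hit[0]}/{hit[1]}"


log = CoverageLog()
log.record("Init->Active", ".", 12)  # "." stands for the current simulation run
log.record("Init->Active", 3, 1)     # a later hit does not replace the first
print(log.describe("Init->Active"))
print(log.describe("Active->Init"))
```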

A simulation run, and associated coverage statistics, may be reset by clicking the Reset to First Step button in the tool bar.

3.4.3. Reading Inputs from Tests

Simulation inputs may also be drawn from tests in a Reactis test suite. Such a test suite may be generated automatically by Reactis Tester, constructed manually in Reactis Simulator, or imported from a file storing test data in a comma-separated-value format. By convention, files storing Reactis test suites have a .rst filename extension. A Reactis test suite may be loaded into Simulator as follows.

Step 11

Click the button in the tool bar to the right of the Source-of-Inputs dialog and use the file-selection dialog to select adaptive_cruise.rst in the examples folder of the Reactis distribution.

This causes adaptive_cruise.rst, the name of a test suite file generated by Reactis Tester, to appear in the title bar and the contents of the Source-of-Inputs dialog to change; it now contains a list of tests that have been loaded. To view this list:

Step 12

Click on the Source-of-Inputs dialog in the tool bar.

Each test in the suite has a row in the dialog which contains a test number, a sequence number, a name, and the number of steps in the test. Clicking the All button in the lower left corner of the dialog specifies that all tests in the suite should be executed one after another. To execute the longest test in the suite:

Step 13

Do the following.

Select the test containing a little over 400 steps in the Source-of-Inputs dialog.

Click the Run Fast Simulation button.

If you look at the bottom-right corner of the Reactis window, you can see that the test is being executed (or has completed), although the results of each execution step are not displayed graphically. When the test execution completes, the exercised parts of the model are drawn in black. If the Run Simulation button is clicked instead, then the results of each simulation step are rendered graphically, with the consequence that simulation proceeds more slowly.

Whenever tests are executed in Simulator, the value computed by the model for each harness outport and test point is compared against the corresponding value stored in the test suite. The tolerance for this comparison can be configured in the Info File Editor. An HTML report listing any differences (as well as any runtime errors encountered) can be generated by loading a test suite in Simulator and then selecting Simulate > Fast Run With Report.
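The comparison against stored test values can be sketched as follows. This Python fragment assumes a single absolute tolerance per outport and numbers steps from 1; both choices, and the function name, are assumptions made for illustration rather than a description of how Reactis configures or reports tolerances.

```python
def compare_outputs(expected, actual, tolerance):
    """Return (step, expected, actual) for each step whose difference exceeds tolerance."""
    return [(step, exp, act)
            for step, (exp, act) in enumerate(zip(expected, actual), start=1)
            if abs(exp - act) > tolerance]


stored_in_test = [1.0, 2.0, 3.0]
computed_by_model = [1.0, 2.05, 3.5]
print(compare_outputs(stored_in_test, computed_by_model, tolerance=0.1))
```

Here only the third step is reported, since its difference of 0.5 exceeds the tolerance while the second step's difference does not.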

3.4.4. Tracking Values of Data Items

When Simulator is paused[5], you may view the current value of a given data item (Simulink block or signal line, Stateflow variable, Embedded MATLAB variable, or C variable) by hovering over the item with your mouse cursor. You may also select data items whose values you wish to track during simulation using the watched-variable and scope facilities of Simulator.

Step 14

In the model hierarchy panel on the left of the Reactis window, select the top-level of the model adaptive_cruise for display. Then:

Right-click on the outport Cruise_active and select Add to Watched from the resulting pop-up menu.

Hover over outport Cruise_active with the mouse; a pop-up displays its current value.

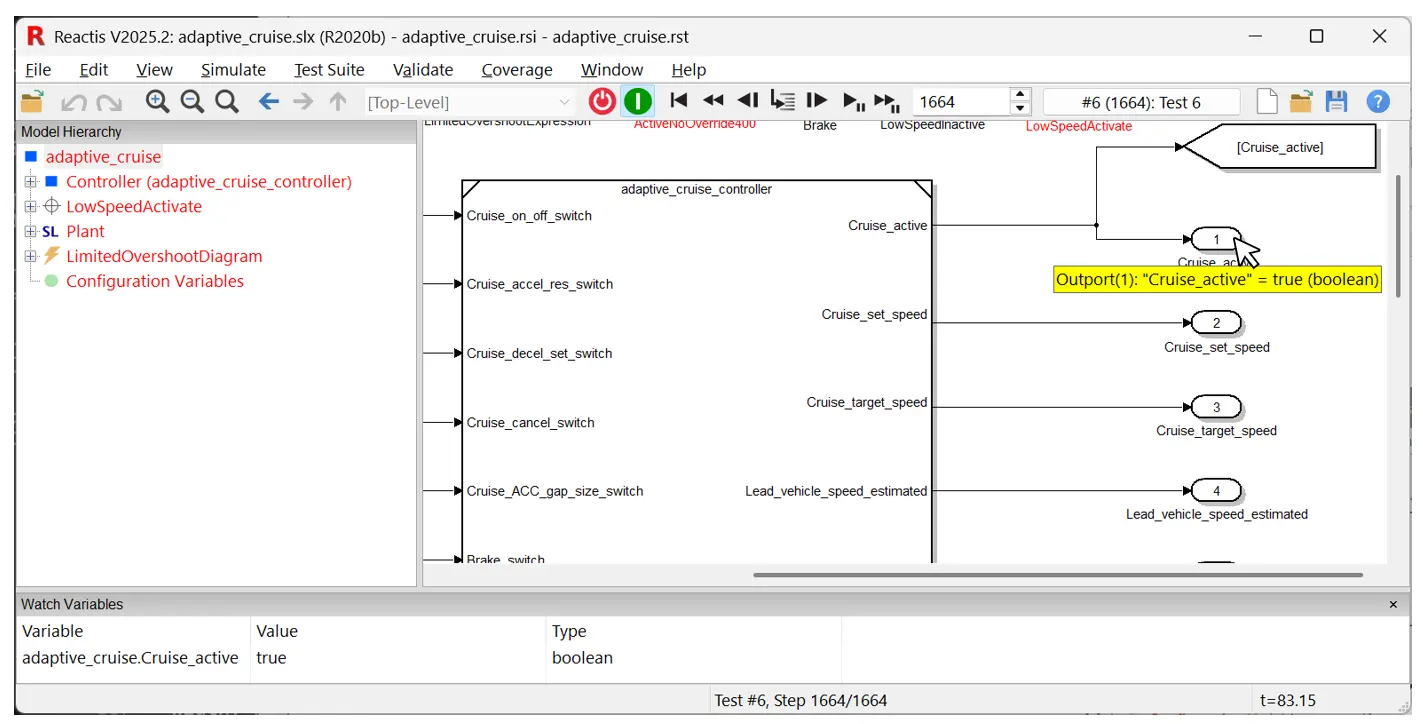

The bottom of the Simulator window now changes to that indicated in Figure 3.8.

Fig. 3.8 The watched-variable panel in Simulator displays the values of data items, as does hovering over a data item with the mouse.

The watched-variable panel shows the values of watched data items in the current simulation step, as does hovering over a data item with the mouse. Variables may be added to, and deleted from, the watched-variable panel by selecting them and right-clicking to obtain a menu. You may also toggle whether the watched-variable list is displayed or not by selecting the View > Show Watched Variables menu item.

Scopes display the values a given data item has assumed since the beginning of the current simulation run. To open a scope:

Step 15

Perform the following.

In the model hierarchy panel, select

cruise_active(if not already selected).Right-click on inport

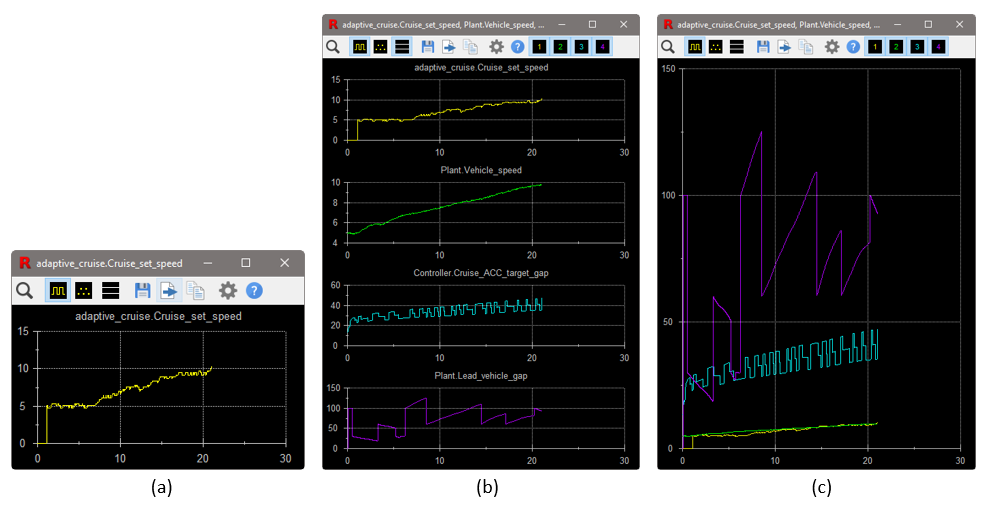

speedand select Open Scope from the resulting pop-up menu. A new window like the one in Figure 3.9 (a) appears and plots the speed of the car during the simulation run.Right-click on the

Vehicle_speedsignal that exits thePlantsubsystem and select Add to Scope > adaptive_cruise.Cruise_set_speed. This causes the vehicle speed to be added to the set speed scope previously opened. Similarly, add to the scope:the

Cruise_ACC_target_gapoutport andthe

Lead_vehicle_gapsignal exiting the plant.

The scope now appears similar to Figure 3.9 (b).

To overlay the signals to simplify comparing them, click the overlay toggle button in the scope tool bar, so that the scope now appears similar to Figure 3.9 (c).

Fig. 3.9 Plotting set speed (yellow), vehicle speed (green), target gap to lead vehicle (blue), and actual gap (purple). The overlay toggle button switches between overlaid signals (c) and separate signals (b).

Scopes also have a zoom feature which is particularly useful for viewing the details of long tests. To zoom in, select a region of interest by clicking in the scope and dragging to specify a region. To scroll, hold down the control key, then click in the scope and drag. See the section on Reactis Scopes for more details.

Step 16

Perform the following.

Select a region of interest within the scope by clicking and dragging while holding the mouse button down. Release the button. This zooms the scope in to view the selected area.

Select a second region of interest within the scope by clicking and dragging. This zooms the scope in a second time.

Hold down the control (Ctrl) key and click within the scope. The cursor will change to a plus sign (+). Move the mouse while holding down the mouse button. This will shift the region displayed within the scope. Release the mouse button.

Right-click on the scope. This zooms the scope out so that the region selected during step 1 is displayed.

Right-click on the scope. This zooms the scope out a second time, so that the area selected before step 1 was performed is displayed. In this case, the entire signal will be visible.

Step 17

Close the watched-variable panel by selecting the View > Show Watched Variables menu item.

3.4.5. Querying the User for Inputs#

The third way for Simulator to obtain values for inports is for you to provide them. To enter this mode of operation:

Step 18

Select User Guided Simulation from the Source-of-Inputs dialog.

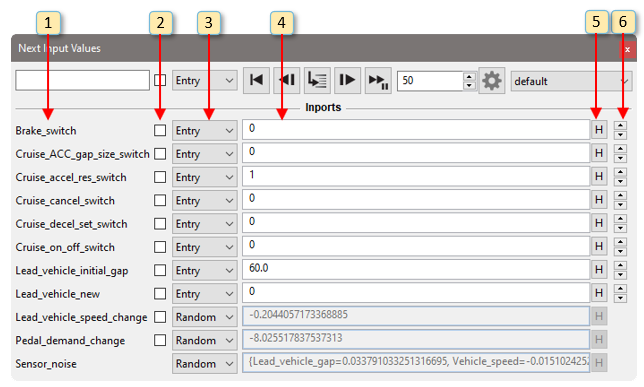

Fig. 3.10 The Next Input Values dialog enables you to hand-select values for harness inputs at each simulation step. Values for configuration variables can be specified at the start of a test.#

Upon selecting this input mode, a Next Input Values dialog appears (as shown in Figure 3.10). This dialog lets you specify the input values for the next simulation step and see the values computed for outputs and test points. Initially, each harness inport of the model has a row in the dialog. You can remove inputs from the dialog or add outputs, test points, and configuration variables by clicking the gear button in the toolbar of the Next Input Values dialog. The row for each inport has six columns that determine the next input value for the corresponding inport as follows.

The name of the item (inport, outport, test point, or configuration variable).

This checkbox toggles whether the item is included in a scope displaying a subset of the signals from the Next Input Values dialog.

This pull-down menu has several entries that determine how the next value for the inport is specified:

- Random

Randomly select the next value for the inport from the type given for the inport in the .rsi file.

- Entry

Specify the next value with the text-entry box in column four of the panel.

- Min

Use the minimum value allowed by the inport’s type constraint.

- Max

Use the maximum value allowed by the inport’s type constraint.

- Test

Read data from an existing test suite.

If the pull-down menu in column three is set to Entry, then the next input value is taken from this text-entry box. The entry can be a concrete value (e.g. integer or floating point constant) or a simple expression that is evaluated to compute the next value. These expressions can reference the previous values of inputs or the simulation time. For example, a ramp can be specified by pre(Pedal_demand_change) + 0.0001 or a sine wave can be generated by sin(t) * 0.001. For the full description of the expression notation see User Input Mode.

If the pull-down menu in column three is set to Entry, then clicking the history button (labeled H) displays recent values the inport has assumed. Selecting a value from the list causes it to be placed in the text-entry box of column four.

The arrow buttons in this column enable you to scroll through the possible values for the port. The arrows are not available for ports of type double or single or ranges with a base type of double or single.
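To get a feel for what the two example entry expressions produce, the following Python sketch evaluates them step by step outside of Reactis. It is only an illustration, not Reactis code: the helper `simulate`, the step size of 1.0 seconds, and the initial previous value of 0.0 are assumptions made for this example.

```python
import math
from typing import Callable, List


def simulate(expr: Callable[[float, float], float],
             n_steps: int,
             dt: float = 1.0,
             initial: float = 0.0) -> List[float]:
    """Evaluate an entry expression once per simulation step.

    expr receives the previous input value (the role played by pre(...))
    and the current simulation time t, and returns the next input value.
    dt and initial are assumptions chosen for this illustration.
    """
    values = []
    previous = initial
    for k in range(n_steps):
        t = k * dt
        value = expr(previous, t)
        values.append(value)
        previous = value
    return values


# Mirrors pre(Pedal_demand_change) + 0.0001: each step adds a fixed increment.
ramp = simulate(lambda pre, t: pre + 0.0001, n_steps=5)

# Mirrors sin(t) * 0.001: the value depends only on the simulation time.
sine = simulate(lambda pre, t: math.sin(t) * 0.001, n_steps=5)

print(ramp)
print(sine)
```

The ramp depends on the previous value of the input, while the sine wave depends only on the simulation time, which is why both quantities are available to entry expressions.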

When Run Fast Simulation is selected, the inport values specified are used for each subsequent simulation step until the simulation is paused. The toolbar at the top of the Next Input Values dialog includes buttons for stepping (mini-step, single-step, fast simulation, reverse step, etc.) that work the same as those in the top-level Simulator window. The text entry box to the left of the toolbar lets you enter a search string to cause Reactis to display only those items whose name contains the search string.

3.4.6. Constructing a Functional Test with User Guided Simulation#

One use case for user guided simulation is for constructing functional tests. A test to ensure that the adaptive cruise control is enabled and disabled as expected can be created as follows.

Step 19

In the following, “take a step” means click the step button in the toolbar of the Next Input Values dialog. “Take n steps” means click that button n times.

If the Next Input Values dialog is not still open from the prior step, reopen it by selecting User Guided Simulation from the Source-of-Inputs dialog.

Click

to reset to the start state.

to reset to the start state.In the Next Input Values toolbar, click the

button to open the Select Signals dialog.

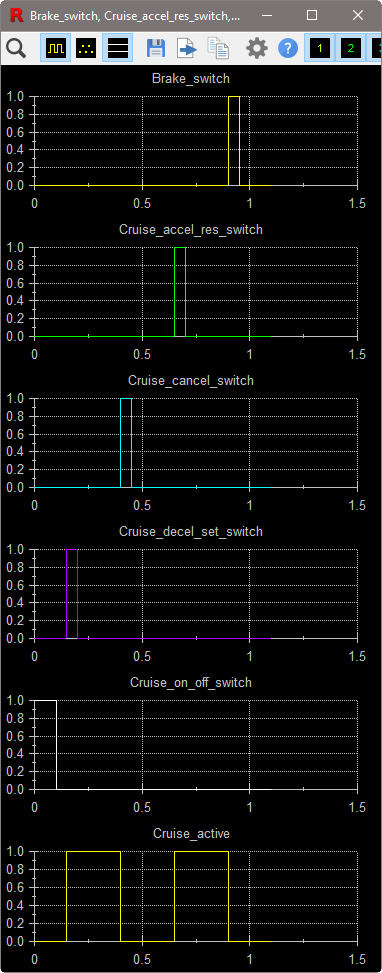

button to open the Select Signals dialog.In the Input Ports tab that opens, click the header Entry to select that radio button for all inputs. For each of the last four inputs (

Pedal_demand_change,Sensor_noise,Lead_vehicle_speed_change, andLead_vehicle_initial_gap) uncheck the Panel checkbox. ForLead_vehicle_initial_gap,Pedal_demand_changeandSensor_noise, change the radio button to Random.Select the Output Ports tab, then check the Panel checkbox for

Cruise_active.Select the Configuration Variables tab, then for

INITIAL_VEHICLE_SPEEDselect Entry and enter a value of30.0. Click Ok.In the Next Input Values dialog, to the right of each item name is a checkbox which you can toggle to include or exclude the item from a scope plotting the signals in the dialog. Check the box for the following items

Brake_switch,Cruise_accel_res_switch,Cruise_cancel_switch,Cruise_decel_set_switch,Cruise_on_off_switch, andCruise_active. This will help you visualize the test as you construct it. In particular, the bottom plot forCruise_activewill be inspected to check that the model is properly enabling and disabling the cruise control. Figure 3.11 shows what the scope will look like when you complete construction of the test.In the entry box for

Cruise_on_off_switchenter1.0. For all other inputs enter0.0. The arrows to the far right can be used to toggle between0.0and1.0. Take one step.Change the entry for

Cruise_on_off_switchto0.0, then take one step.Set

Cruise_decel_set_switchto 1.0 (indicating we want to activate the cruise control) and take a step. ChangeCruise_decel_set_switchback to0.0and take four steps. The scope forCruise_decel_set_switchshows it going from0.0to1.0, then back to0.0.Cruise_activebecomes1.0indicating the cruise control is enabled.Change

Cruise_cancel_switchto1.0and take a step, then change the input back to0.0and take four steps.Cruise_activeis0.0.Change

Cruise_accel_res_switchto1.0and take a step. Change it back to0.0and take four steps.Cruise_activeis1.0.Change

Brake_switchto1.0and take a step. Change it back to0.0and take four steps.Cruise_activeis0.0.In the main Reactis window, select Test Suite > Add/Extend Test, then Test Suite > Save. This adds the newly constructed test to the current test suite and then saves the test suite. Now, whenever this test is run in Reactis Simulator, Reactis will check that the value computed by the model for

Cruise_active, matches the value stored in the test suite (which you just checked manually).

Fig. 3.11 This scope shows a functional test constructed with user guided simulation that checks that the cruise control is enabled and disabled as expected.#
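The sequence of inputs entered in Step 19 can also be written down as a table of steps, which makes the shape of the test easier to review. The Python sketch below is purely illustrative and does not use any Reactis API: the helpers `pulse` and `build_test` are hypothetical names, and only the five switch inputs shown in the panel are modeled.

```python
from typing import Dict, List, Tuple

# The five switch inputs that remain in the panel during Step 19.
SWITCHES = [
    "Brake_switch",
    "Cruise_accel_res_switch",
    "Cruise_cancel_switch",
    "Cruise_decel_set_switch",
    "Cruise_on_off_switch",
]


def pulse(name: str, hold_steps: int) -> List[Dict[str, float]]:
    """One step with `name` at 1.0, then `hold_steps` steps with all switches at 0.0."""
    zero = {switch: 0.0 for switch in SWITCHES}
    return [{**zero, name: 1.0}] + [dict(zero) for _ in range(hold_steps)]


def build_test() -> Tuple[List[Dict[str, float]], List[Tuple[int, float]]]:
    """Return the input steps and (step index, expected Cruise_active) checkpoints."""
    steps = pulse("Cruise_on_off_switch", 1)  # switch the cruise control on
    checkpoints = []
    phases = [
        ("Cruise_decel_set_switch", 1.0),  # set: cruise control becomes active
        ("Cruise_cancel_switch", 0.0),     # cancel: inactive
        ("Cruise_accel_res_switch", 1.0),  # resume: active again
        ("Brake_switch", 0.0),             # brake: inactive
    ]
    for name, expected_active in phases:
        steps += pulse(name, 4)
        checkpoints.append((len(steps) - 1, expected_active))
    return steps, checkpoints


steps, checkpoints = build_test()
print(len(steps), checkpoints)
```

Each checkpoint pairs the last step of a phase with the value of Cruise_active that the tour tells you to observe at that point.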

3.4.7. Other Simulator Features#

Simulator has several other noteworthy features. You may step both forward and backward through a simulation using toolbar buttons:

The single-step button executes a single execution step.

The mini-step button executes the next “mini-step” (a block or statement at a time).

The reverse-step button causes the simulation to go back (undo) a single step.

A further button causes the simulation to go back multiple steps.

If using the Reactis for C Plugin with a model that includes C code, additional buttons appear for stepping through C code. See the Reactis for C Plugin chapter for a description of how these buttons work.

You may specify the number of steps taken when one of the multi-step buttons is pressed by adjusting the number in the text-entry box next to those buttons in the toolbar.

When a simulation is paused at the end of a simulation step (as opposed to in the middle of a simulation step), the current simulation run may be added to the current test suite by selecting the menu item Test Suite > Add/Extend Test. After the test is added it appears in the Source-of-Inputs dialog. After saving the test suite with Test Suite > Save, the steps in the new test may be viewed by selecting Test Suite > Browse. A model (or portion thereof, including coverage information) may be printed by selecting File > Print….

Breakpoints may be set by either:

right-clicking on a subsystem or state in the hierarchy panel and selecting Toggle Breakpoint; or

right-clicking on a Simulink block, Stateflow state, or Stateflow transition in the main panel and selecting Toggle Breakpoint. Additionally, if you are using the Reactis for EML Plugin or the Reactis for C Plugin, then a breakpoint may be set on any line of C/EML code that contains a statement by right-clicking just to the right of the line number and selecting Toggle Breakpoint.

A breakpoint symbol is drawn on a model item when a breakpoint is set. During a simulation run, whenever a breakpoint is hit, Simulator pauses immediately.

3.5. Tester#

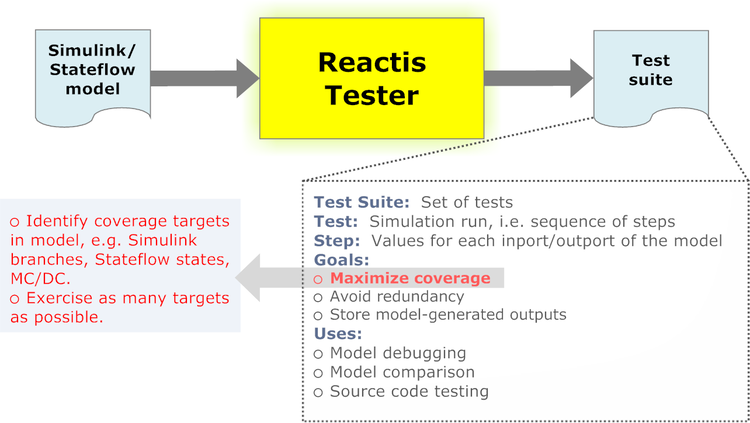

Tester may be used to generate a test suite (a set of tests) automatically from a Simulink / Stateflow model as shown in Figure 3.12. The tool identifies coverage targets in the model and aims to maximize the number of targets exercised by the generated tests.

Fig. 3.12 Reactis Tester takes a Simulink / Stateflow model as input and generates a test suite.#

Step 20

To start Tester, select Test Suite > Create.

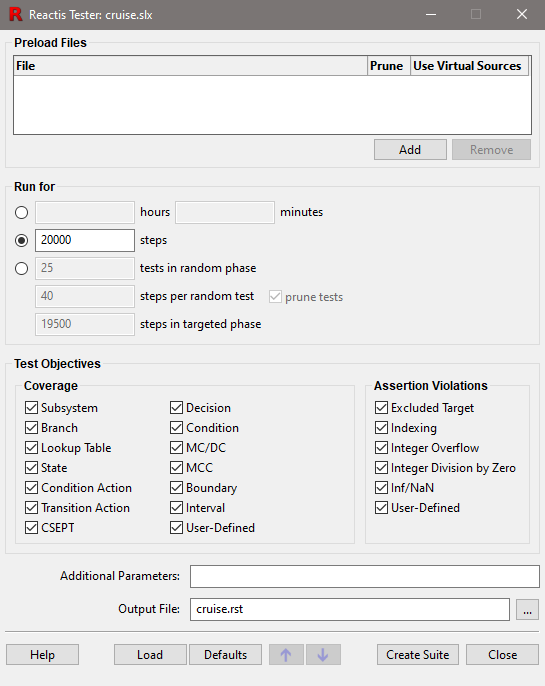

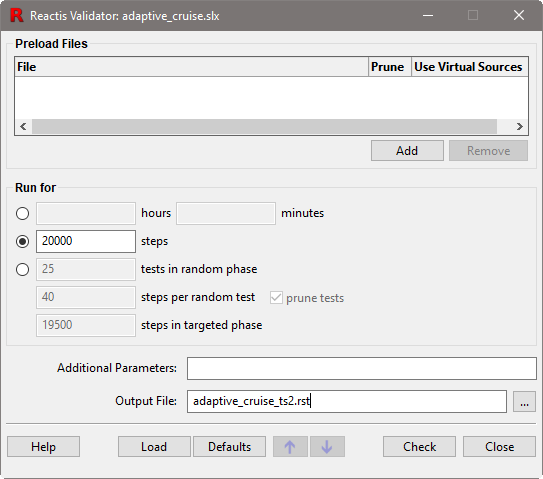

This causes the window shown in Figure 3.13 to appear. If you specify existing test suites in the Preload Files section, Tester will execute those suites, then add additional test steps that exercise targets not covered by the preloaded suite(s). The second section Run for determines how long Tester should run. The third section Test Objectives lists the coverage metrics and assertion categories which Tester will focus on. In the bottom section, you specify the name of the output file in which Tester will store the new test suite.

Fig. 3.13 The Reactis Tester launch dialog.#

There are three options provided in the Run for section. The default option, as shown in Figure 3.13, is to run Tester for 20,000 steps. Alternatively, you may choose to run Tester for a fixed length of time by clicking on the top radio button in the Run for section, after which the steps entry box will be disabled and the hours and minutes entry boxes will be enabled. These values are added together to determine the total length of time for which Tester will run, so that entering a value of 1 for hours and 30 for minutes will cause Tester to run for 90 minutes. See the Reactis Tester chapter for usage details of the less-often used third radio button in the Run for section.

The Test Objectives section contains check boxes which are used to select the kinds of coverage metrics and assertion categories Tester will focus on while generating tests. The Reactis Coverage Metrics chapter describes the different types of coverage tracked by Reactis.

Step 21

To generate a test suite,

Set Steps to 30000 and Output file to adaptive_cruise_ts1.rst. For all other settings retain the default.

Click the Create Suite button.

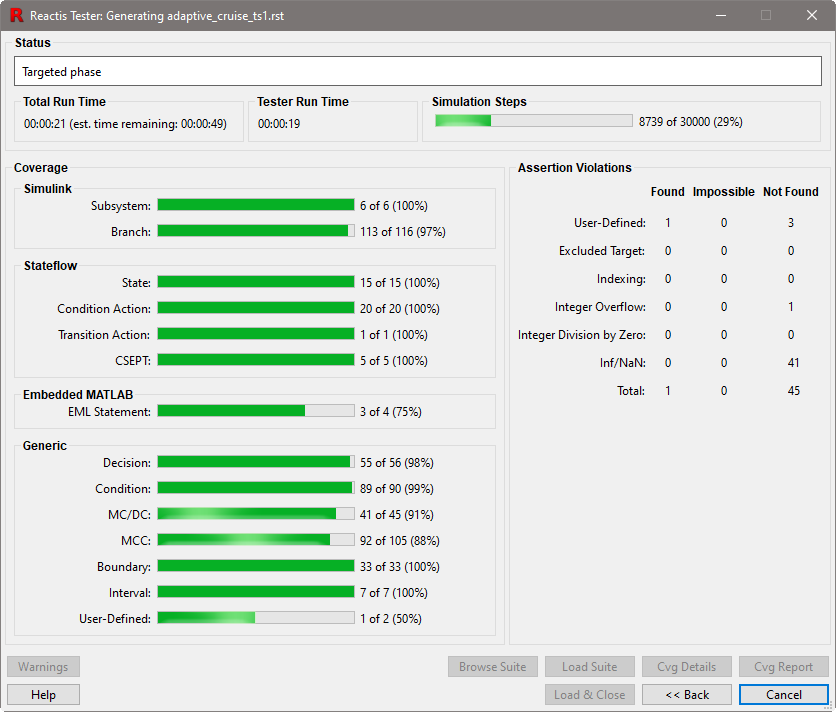

Fig. 3.14 The Reactis Tester progress dialog.#

The Tester progress dialog, shown in Figure 3.14, is displayed during test-suite generation. When Tester terminates, a results dialog is shown, and a file adaptive_cruise_ts1.rst containing the suite is produced. The results dialog includes buttons for loading the new test suite into the Test-Suite Browser (see below) or Reactis Simulator.

3.6. The Test-Suite Browser#

The Test-Suite Browser is used to view the test suites created by Reactis. It may be invoked from either the Tester results dialog or the Reactis top-level window.

Step 22

Select the Test Suite > Browse menu item and then adaptive_cruise_ts1.rst from the file selection dialog that pops up.

Fig. 3.15 The Reactis Test-Suite Browser.#

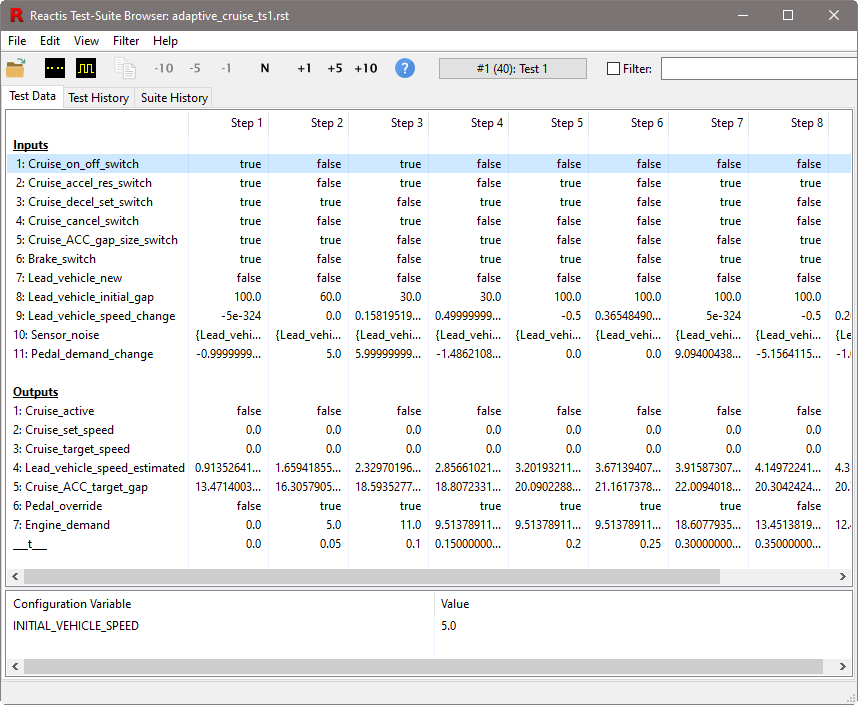

A Test-Suite Browser window like the one shown in Figure 3.15 is displayed. The Test Data tab of the browser displays the test selected in the button/dialog located near the center of the browser’s tool bar. The main panel in the browser window shows the indicated test as a matrix, in which the first column gives the names of input and output ports of the harness and each subsequent column lists the values for each port for the corresponding simulation step. The simulation time is displayed in the output row labeled ___t___. The buttons on the tool bar may be used to scroll forward and backward in the test. The Test History and Suite History tabs display history information recorded by Reactis for the test and the suite as a whole.

The Filter entry box on the right side of the toolbar lets you search for test steps that satisfy a given condition. You enter a boolean expression in the search box and then select the filter check box to search for test steps in the suite for which the expression evaluates to true. For example, to see each step where the cruise control is active, enter Cruise_active == true and then select the Filter check box.
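Conceptually, the filter keeps only those test steps for which the boolean expression holds. The following Python sketch illustrates that idea on a small made-up test; the data values and the helper `filter_steps` are assumptions for illustration and are not part of Reactis.

```python
from typing import Callable, Dict, List

Step = Dict[str, object]


def filter_steps(steps: List[Step], condition: Callable[[Step], bool]) -> List[int]:
    """Return the indices of the steps for which the condition evaluates to true."""
    return [index for index, step in enumerate(steps) if condition(step)]


# A made-up test with four steps; real tests come from a .rst test suite.
test = [
    {"___t___": 0.0, "Cruise_active": False},
    {"___t___": 1.0, "Cruise_active": False},
    {"___t___": 2.0, "Cruise_active": True},
    {"___t___": 3.0, "Cruise_active": True},
]

# Corresponds to entering Cruise_active == true in the Filter box.
active_steps = filter_steps(test, lambda step: step["Cruise_active"] is True)
print(active_steps)
```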

The Test-Suite Browser may also be used to display the entire set of values passing over a port during a single test or set of tests.

Step 23

Perform the following in the Test-Suite Browser window.

Click on the row for inport Pedal_demand_change.

Right-click on the row and select Open Distribution Scope (current test).

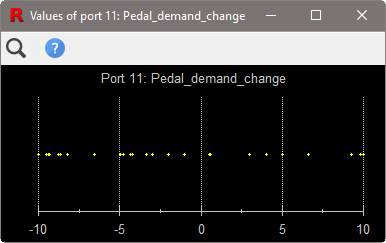

A dialog similar to that shown in Figure 3.16 appears. In the figure, each value assumed by the inport Pedal_demand_change is represented by a yellow dot.

Fig. 3.16 Values passing over inport Pedal_demand_change during a test.#

3.7. Validator#

Reactis Validator is used for checking whether models behave correctly. It enables the early detection of design errors and inconsistencies and reduces the effort required for design reviews. The tool gives you three major capabilities.

- Assertion checking.

You can instrument your models with assertions, which monitor model behavior for erroneous scenarios. The instrumentation is maintained by Reactis; you need not alter your .slx file. If Validator detects an assertion violation, it returns a test highlighting the problem. This test may be executed in Reactis Simulator to uncover the source of the error.

- Test scenario specifications.

You can also instrument your models with user-defined targets, which may be used to define test scenarios to be considered in the analysis performed by Tester and Validator. Like assertions, user-defined targets also monitor model behavior; however, their purpose is to determine when a desired test case has been constructed (and to guide Reactis to construct it), so that the test may be included in a test suite.

- Concrete test scenarios.

Finally, you can place virtual sources at the top level of a model to control one or more harness inports as a model executes in Reactis. That is, you can specify a sequence of values to be consumed by an inport during simulation or test-generation. Virtual sources can be easily enabled and disabled. When enabled, the virtual source controls a set of inports and while disabled those inports are treated by Reactis just as normal top-level inports.

Conceptually, assertions may be seen as checking system behavior for potential errors, user-defined targets monitor system behavior in order to check for the presence of certain desirable executions (tests), and virtual sources generate sequences of values to be fed into model inports. Syntactically, Validator assertions, user-defined targets, and virtual sources have the same form and are collectively referred to as Validator objectives.

Validator objectives play key roles in checking a model against requirements given for its behavior. A typical requirements specification consists of a list of mandatory properties. For example, a requirements document for an automotive cruise control might stipulate that, among other things, whenever the brake pedal is depressed, the cruise control should disengage. Checking whether or not such a requirement holds of a model would require a method for monitoring system behavior to check this property, together with a collection of tests that would, among other things, engage the cruise control and then apply the brake. In Validator, the behavior monitor would be implemented as an assertion, while the test scenario involving engaging the cruise control and then stepping on the brake would be captured as a user-defined target.

3.7.1. Manipulating Validator Objectives#

This section gives more information about Validator objectives.

Step 24

Do the following.

Disable Simulator using its button in the top-level toolbar. (Validator objectives may only be modified or inserted when Simulator is disabled.)

In the model hierarchy panel, select the top-level of the model adaptive_cruise for display in the main window.

After performing these operations, you now see a window like the one depicted in Figure 3.17. The three kinds of objectives are represented by different icons: assertions are denoted by a lightning bolt, targets are marked by a cross-hair symbol, and virtual sources have their own distinct icon.

Fig. 3.17 The top-level Reactis window, with Validator objectives.#

Validator objectives may take one of two forms.

- Expressions.

Validator supports a simple expression language for defining assertions, user-defined targets, and virtual sources.

- Simulink / Stateflow observer diagrams.

Simulink / Stateflow diagrams may also be used to define objectives. Such a Simulink subsystem defines a set of objectives, one for each outport of the subsystem. For assertion diagrams, a violation is flagged by a value of zero on an outport. For user-defined targets, coverage is indicated by the presence of a non-zero value on an outport. For virtual sources, each outport controls an inport of the model.

Step 25

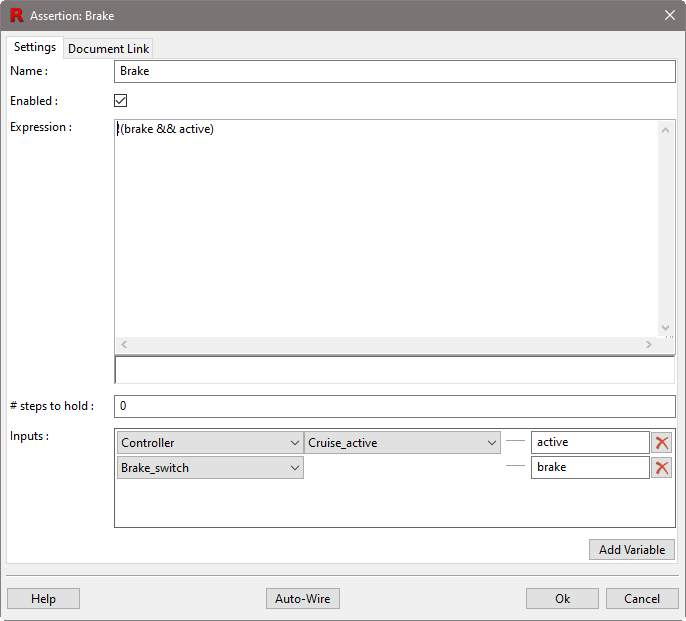

To see an example expression objective, right-click on the Brake assertion and select Edit Properties. When through with this step, click Cancel to dismiss the dialog.

Fig. 3.18 The dialog for adding or modifying a Validator expression objective.#

A dialog like that shown in Figure 3.18 now appears. This dialog shows an expression intended to capture the following requirement for the cruise control: “Activating the brake shall immediately cause the cruise control to become inactive.” Note that the dialog consists of five parts:

Name. This name labels the assertion in the model diagram.

Enabled. This check box enables and disables the expression.

Expression. This is a C-like boolean expression. The interpretation of such an assertion is that it should always remain “true”. If it becomes false, then an error has occurred and should be flagged. In this case, the expression evaluates to “true” provided that at least one of brake and active is false (i.e. the conjunction of the two is false). The section named The Simulink Expression Objective Dialog contains more detail on the expression notation.

Steps to hold (labeled “# steps to hold”). For assertions, this entry is an integer value that specifies the number of simulation steps that the expression must remain false before flagging an error. For user-defined targets, the entry specifies the number of steps that the expression must remain true before the target is considered covered.

Inputs. The entry boxes in the right column of this section list the variables used to construct the expression. These can be viewed as virtual input ports to the expression objective. The pull-down menus to the left of the section specify which data items from the model feed into the virtual inputs. Note that, although you can manually specify the inputs and wiring within this dialog, it is simpler to:

Leave this section blank when creating an objective.

After clicking Ok to dismiss the dialog, drag and drop signals from the main Reactis panel onto the objective.
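The interplay between the assertion expression and the “# steps to hold” entry can be illustrated with a small Python sketch. This is not Reactis code: the function `first_violation`, the trace data, and the reading that an error is flagged on the step at which the expression has been false for the required number of consecutive steps are all assumptions made for illustration.

```python
from typing import Callable, Dict, List, Optional

Step = Dict[str, bool]


def first_violation(trace: List[Step],
                    expression: Callable[[Step], bool],
                    steps_to_hold: int = 1) -> Optional[int]:
    """Return the index of the step at which the assertion is flagged, or None.

    The assertion is flagged once the expression has been false for
    `steps_to_hold` consecutive steps (an assumed interpretation).
    """
    consecutive_false = 0
    for index, step in enumerate(trace):
        if expression(step):
            consecutive_false = 0
        else:
            consecutive_false += 1
            if consecutive_false >= steps_to_hold:
                return index
    return None


def brake_assertion(step: Step) -> bool:
    """True provided that at least one of brake and active is false."""
    return not (step["brake"] and step["active"])


# Made-up trace: the brake is applied at step 2, but the cruise control
# stays active for two steps before becoming inactive.
trace = [
    {"brake": False, "active": True},
    {"brake": False, "active": True},
    {"brake": True, "active": True},
    {"brake": True, "active": True},
    {"brake": True, "active": False},
]

print(first_violation(trace, brake_assertion, steps_to_hold=1))
print(first_violation(trace, brake_assertion, steps_to_hold=3))
```

With a hold count of 1 the made-up trace is flagged as soon as brake and active are both true, whereas a hold count of 3 tolerates the two-step delay in this trace.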

Step 26

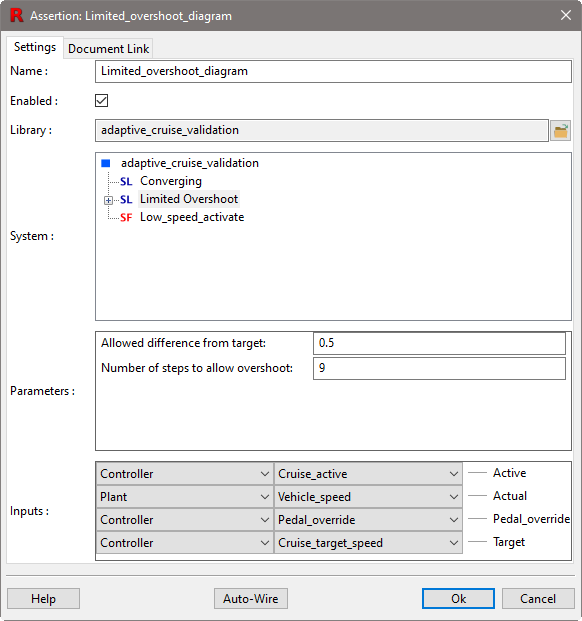

To see an example diagram objective, in the main panel, right-click on the assertion Limited_overshoot_diagram and select Edit Properties.

Fig. 3.19 The Properties dialog for a diagram objective (the assertion Limited_overshoot_diagram).#

A dialog like the one in Figure 3.19 now appears. This dialog contains six sections.

Name. The name of the objective.

Enabled. This check box enables and disables the objective.

Library. The Simulink library containing the diagram for the objective. In the case of Limited_overshoot_diagram, the assertion resides in adaptive_cruise_validation.slx.

System. The system in the library that is to be used as the objective. In this case, the system to be used is Limited Overshoot.

Parameters. If the subsystem selected for use as an objective is a masked subsystem, then this panel is used to enter the relevant parameters.

Inputs. The wiring panel is used to indicate which data items in the model should be connected to the inputs of the objective. In this case, Limited_overshoot_diagram has four inputs: Active, Actual, Pedal_override, and Target. The wiring information indicates that the first input should be connected to the Cruise_active output of the Controller subsystem. The remaining inputs are wired similarly. In general, this panel contains pull-downs describing all valid data items in the current scope to which inputs of the objective may be connected.

Note that, although you can manually specify the wiring within this dialog, it is simpler to:

Leave the wiring unspecified after inserting an objective.

After clicking Ok to dismiss the dialog, drag and drop signals from the main Reactis panel onto the objective.

Wiring information may be viewed by hovering over a diagram objective in the main Reactis panel.

Diagram-based objectives may be viewed as monitors that read values from the model being observed and raise flags by setting their outport values appropriately (zero for false, non-zero for true). A diagram-based assertion defines one check for each of its outports. A check is violated if it ever assumes the value zero. Similarly, a diagram-based target objective defines one target for each outport. Such a target is deemed covered if it becomes non-zero.
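The zero / non-zero convention for diagram outports can be summarized in a few lines of Python. The sketch below is an illustration only, with hypothetical outport names and made-up values; it does not reflect how Reactis evaluates diagrams internally.

```python
from typing import Dict, List

# Values recorded on each outport of an objective diagram, one per step.
OutportTrace = Dict[str, List[float]]


def violated_checks(trace: OutportTrace) -> List[str]:
    """Assertion diagram: a check is violated if its outport is ever zero."""
    return sorted(name for name, values in trace.items()
                  if any(value == 0 for value in values))


def covered_targets(trace: OutportTrace) -> List[str]:
    """Target diagram: a target is covered if its outport is ever non-zero."""
    return sorted(name for name, values in trace.items()
                  if any(value != 0 for value in values))


# Hypothetical outports and values, chosen only to show both outcomes.
assertion_trace = {"check_a": [1, 1, 1, 1], "check_b": [1, 0, 1, 1]}
target_trace = {"target_a": [0, 0, 0, 0], "target_b": [0, 0, 1, 0]}

print(violated_checks(assertion_trace))
print(covered_targets(target_trace))
```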

Step 27

To view the diagram associated with Limited_overshoot_diagram:

If still open, close the properties dialog for Limited_overshoot_diagram by clicking the Cancel button at the bottom of the dialog box.

Double-click on the Limited_overshoot_diagram assertion icon in the main panel.

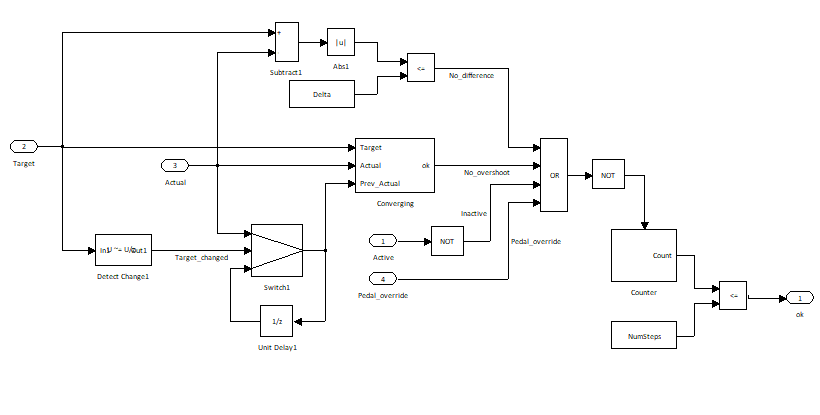

These operations display the Simulink diagram shown in Figure 3.20. This diagram encodes the requirement that when the cruise control is engaged the vehicle speed converges nicely to the target speed.

Fig. 3.20 A Validator diagram assertion encoding a requirement that, when engaged, the cruise control causes the vehicle speed to converge to the target speed.#

Diagram objectives give you the full power of Simulink / Stateflow to formulate assertions and targets. The objectives may use any Simulink blocks supported by Reactis, including full Stateflow. The diagrams are created using Simulink and Stateflow in the same way standard models are built; they are stored in a separate .slx file from the model under validation.

Diagram wiring is managed by Reactis, so the model under validation need not be modified at all. As this information is stored in the .rsi file associated with the model, it also persists from one Reactis session to the next. After adding a diagram objective to a model, the diagram is included in the model’s hierarchy tree, just as library links are. See the Reactis Validator chapter for more details.

3.7.2. Launching Validator#

Step 28

To use Validator to check for assertion violations:

Select the Validate > Check Assertions… menu entry.

Fig. 3.21 Launching Validator brings up this screen.#

A dialog like the one in Figure 3.21 now appears. The dialog is similar to the Tester launch dialog because the algorithms underlying the two tools are very similar. Conceptually, Validator works by generating thorough test suites from the instrumented model using the Tester test-generation algorithm and then applying these tests to the instrumented model to see if any violations occur. Note that when a model is instrumented with Validator objectives, the test-generation algorithm uses the objectives to direct the test construction process. In other words, Reactis actively attempts to find tests that violate assertions and cover user-defined targets. Validator stores the test suite it creates in the file specified in this dialog. The tests may then be used to diagnose any assertion violations that arise.

Note that if a model is instrumented with Validator objectives, Reactis Tester also aims to violate the assertions and cover the user-defined targets.