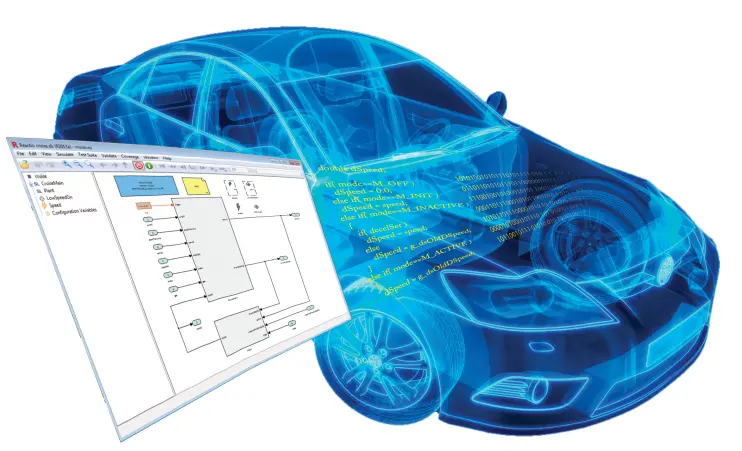

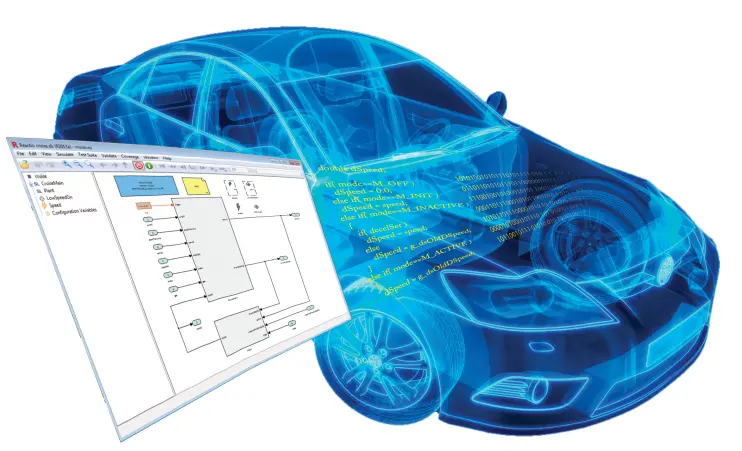

Reactis® Testing Tools for Simulink® and C Code

Find bugs, fix bugs, deploy more robust software faster

| Product | Description |
|---|---|
| Reactis for Simulink | Test, simulate, and validate Simulink/Stateflow® models |
| Reactis for EML Plugin | Integrates with Reactis to support the Embedded MATLAB® parts of models (MATLAB Function blocks, EML called from Stateflow) |
| Reactis for C Plugin | Integrates with Reactis to support the C code portions of models (C Caller blocks, S-Functions, and Stateflow custom code) |
| Reactis Model Inspector | Standalone, lightweight Simulink viewer |
| Reactis for C | Test, simulate, and validate C code (not contained in a model) |

Will unexpected inputs cause a runtime error?

How much of my code have I really tested?

How do I understand, diagnose, and fix a bug?

Will the brake always deactivate the cruise control?

How do I test my code against the model?

TÜV SÜD has certified that Reactis is qualified for safety-related development according to ISO 26262, up to ASIL D.


Get started with Reactis today. Download a free trial or schedule a demo with our experts.