6. Use Cases

6.1. Back-to-Back Testing

When using model-based development within an ISO 26262 process, ISO 26262-6 sub-clauses 9.4.2 and 10.4.2 recommend performing back-to-back comparisons of a model and the implementation derived from the model. The goal of back-to-back testing is to determine whether an implementation and its model produce the same outputs when given the same inputs. Four requirements are essential for back-to-back testing to be successful. First, there should be a high degree of confidence in the correctness of the model, gained from prior testing of the model against its requirements. Second, for all inputs, the implementation should produce outputs that are reasonably close to the outputs of the model; small differences are likely due to rounding of results during numerical calculations. Third, the tests used to perform the comparison should achieve a high degree of coverage of the model and its requirements. Fourth, the tests should achieve a high degree of coverage of the implementation.
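The second requirement implies a tolerance-based comparison rather than exact equality. The following Python sketch shows one way such a check could be written. The function name `compare_outputs`, the tolerance values, and the sample traces are assumptions chosen for illustration; they do not describe how Reactis performs the comparison.

```python
import math

def compare_outputs(model_out, impl_out, rel_tol=1e-6, abs_tol=1e-9):
    """Return (step, model_value, impl_value) for every step that differs
    by more than the given tolerances."""
    if len(model_out) != len(impl_out):
        raise ValueError("output traces must have the same number of steps")
    mismatches = []
    for step, (m, c) in enumerate(zip(model_out, impl_out)):
        if not math.isclose(m, c, rel_tol=rel_tol, abs_tol=abs_tol):
            mismatches.append((step, m, c))
    return mismatches

# Hypothetical traces: the implementation shows rounding noise in steps 1
# and 2 and a genuine deviation in step 3.
model_trace = [0.0, 0.1, 0.3, 1.0]
code_trace = [0.0, 0.1 + 1e-12, 0.1 + 0.2, 1.5]

print(compare_outputs(model_trace, code_trace))  # [(3, 1.0, 1.5)]
```

Suitable tolerances depend on the data types and arithmetic used by the implementation, so the defaults above should be treated as placeholders.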

6.1.1. Back-to-Back Comparison Between Model and Code

For applications which are implemented using the C language, Reactis for Simulink and Reactis for C can be used in tandem to support efficient back-to-back testing. Note that the C code itself may be coded by hand or automatically generated from the model using a tool such as Embedded Coder offered by MathWorks or TargetLink offered by dSPACE. In either case, the code should be compared to the model in a back-to-back test.

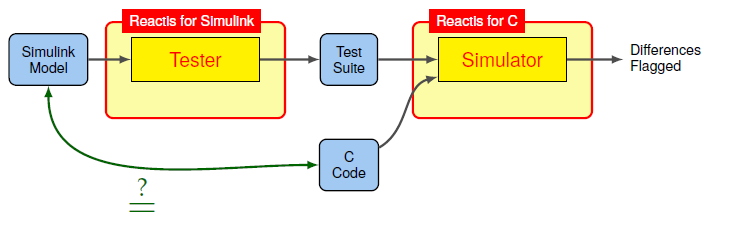

Fig. 6.1 Back-to-back model vs. code comparison using Reactis.

Figure 6.1 depicts the workflow when using Reactis to perform back-to-back testing of a model and a C code implementation of the model. The testing process consists of three steps:

1. Generate a comprehensive test suite from the model using Reactis Tester (see Reactis User’s Guide: Tester).

2. Execute the test suite on the C code in Reactis for C using Simulator (see Reactis for C User’s Guide: Creating Test Execution Reports).

3. Review the results. Any differences in behavior will be flagged.

6.1.2. Back-to-Back Comparison Between Old and New Versions of a Model (Regression Testing)

As noted in ISO 26262-6 sub-clause 5.2, methods from agile software development, such as nightly regression testing, can be a helpful part of an ISO 26262-based process. In a model-based design process, a new version of a model can be tested against a prior version to screen for bugs that may have been introduced while revising the model.

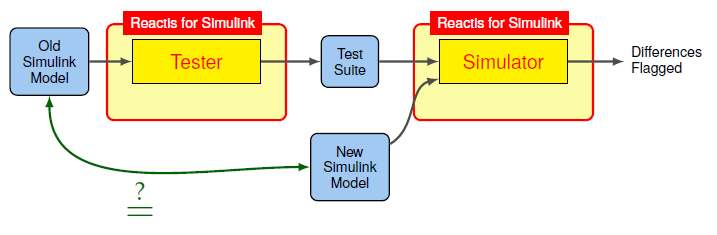

Fig. 6.2 Back-to-back regression testing of new vs. old model versions using Reactis.

Regression testing of models can be done using Reactis, as shown in Figure 6.2. The testing process depicted in this figure consists of three major steps:

1. Generate a comprehensive test suite from the old model using Reactis Tester (see Reactis User’s Guide: Tester).

2. Run the generated tests on the new model in Reactis Simulator (see Reactis User’s Guide: Executing Test Suites Using Reactis Simulator).

3. Review the results. Any differences in behavior will be flagged.
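The record-and-replay idea behind these steps can be sketched in a few lines of Python. Everything here is hypothetical: the two toy "model versions" are stateful saturating counters, and `record_suite` and `replay_suite` are illustrative helpers, not Reactis functions. The sketch only shows how outputs recorded from an old version can serve as the expected values for a new one.

```python
def make_old_model():
    """Old version: a counter that saturates at 3."""
    state = {"count": 0}
    def step(increment):
        state["count"] = min(state["count"] + increment, 3)
        return state["count"]
    return step

def make_new_model():
    """New version: the saturation limit was (accidentally) changed to 4."""
    state = {"count": 0}
    def step(increment):
        state["count"] = min(state["count"] + increment, 4)
        return state["count"]
    return step

def record_suite(model_factory, tests):
    """Run each test (a list of inputs) on a fresh model and store its outputs."""
    suite = []
    for inputs in tests:
        step = model_factory()
        suite.append((inputs, [step(u) for u in inputs]))
    return suite

def replay_suite(model_factory, suite):
    """Return (test, step, expected, actual) for every differing output."""
    diffs = []
    for t, (inputs, expected) in enumerate(suite):
        step = model_factory()
        for s, (u, exp) in enumerate(zip(inputs, expected)):
            act = step(u)
            if act != exp:
                diffs.append((t, s, exp, act))
    return diffs

tests = [[1, 1], [1, 1, 1, 1]]
suite = record_suite(make_old_model, tests)
print(replay_suite(make_new_model, suite))  # [(1, 3, 3, 4)]
```

Only the second test drives the counter past the old limit, which illustrates why the generated suite needs high coverage for a regression to be caught.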

6.1.3. Back-to-Back Comparison Between a Model and a Compiled Executable

Before software components can be incorporated into an automotive or other embedded application, they must be compiled into an executable format (typically machine code of some sort) that will run on the target hardware. A back-to-back test that compares the behavior of the executable to the behavior of the model from which the executable was derived can serve as a useful check that the compiled code performs correctly. This check can be a part of the hardware-in-the-loop (HIL) testing recommended in sub-clause 11.4.1 of ISO 26262-6.

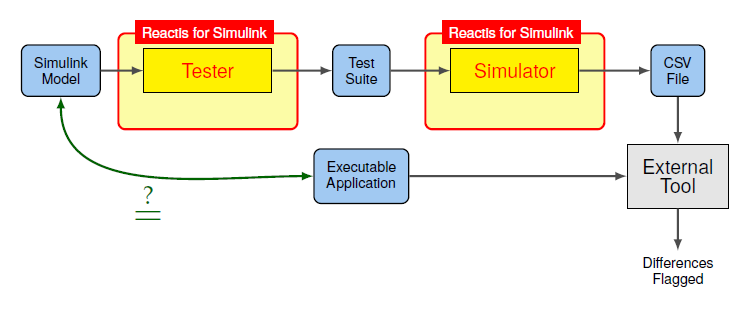

Fig. 6.3 Back-to-back testing of a model vs. executable using Reactis.

Figure 6.3 depicts the workflow for performing back-to-back testing of a model vs. an executable with Reactis. The test process consists of four steps:

1. Generate a comprehensive test suite from the model using Reactis Tester (see Reactis User’s Guide: Tester).

2. Export the test suite to a CSV file suitable for import into an external tool (see Reactis User’s Guide: Exporting Test Suites).

3. In the external tool, import the CSV test data and execute the tests on the compiled application (either in a host-based environment or on target).

4. Compare the outputs stored in the tests against those computed by the application.
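The final comparison step could be scripted along the following lines. This is a sketch under stated assumptions: the column names (`time`, `speed_cmd`), the CSV layout, and the tolerance are invented for illustration and are not the format produced by Reactis or by any particular external tool. The real export should be inspected before adapting this.

```python
import csv
import io
import math

def read_column(csv_text, column):
    """Parse CSV text and return the named column as a list of floats."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [float(row[column]) for row in reader]

def diff_outputs(expected_csv, actual_csv, column, abs_tol=1e-6):
    """Return the row indices where expected and actual values differ
    by more than abs_tol."""
    expected = read_column(expected_csv, column)
    actual = read_column(actual_csv, column)
    if len(expected) != len(actual):
        raise ValueError("expected and actual data have different lengths")
    return [
        i for i, (e, a) in enumerate(zip(expected, actual))
        if not math.isclose(e, a, rel_tol=0.0, abs_tol=abs_tol)
    ]

# Hypothetical data: outputs stored in the exported tests, and outputs
# logged while running the compiled application.
expected_csv = "time,speed_cmd\n0.0,0.0\n0.1,1.5\n0.2,3.0\n"
actual_csv = "time,speed_cmd\n0.0,0.0\n0.1,1.5000001\n0.2,3.2\n"

print(diff_outputs(expected_csv, actual_csv, "speed_cmd"))  # [2]
```

In practice the two inputs would be read from files rather than from in-memory strings; the strings keep the example self-contained.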

6.2. Detecting and Eliminating Runtime Errors from Models or C Code

Requirement 7.4.14 of ISO 26262-6 specifies simulation of the dynamic behavior of the design as a method for verification of the software architectural design. Additionally, ISO 26262-6 sub-clause 8.4.5 describes design principles for software unit design and implementation that must be addressed at the source code level and unit level in order to achieve a certain level of robustness, including methods to detect and eliminate runtime errors.

Reactis offers an easy way to perform a comprehensive simulation of a model (or C code) in order to detect runtime errors:

1. Generate a test suite in Reactis Tester from a model or C code (see Reactis User’s Guide: Tester or Reactis for C User’s Guide: Tester).

2. Review the runtime errors flagged during test generation.

3. Diagnose and debug the errors by running the generated tests in Reactis Simulator (see Reactis User’s Guide: Executing Test Suites Using Reactis Simulator or Reactis for C User’s Guide: Simulator).

Note: to maximize the chances of finding a runtime error, it is a good idea to generate a test suite which achieves a high level of coverage. Techniques for maximizing coverage are described in Reactis User’s Guide: Maximizing Coverage.
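To illustrate why coverage matters for finding runtime errors, the Python sketch below runs a small set of tests against a toy step function and collects the errors that surface. The function `controller_step`, the lookup table, and the test inputs are hypothetical, and the error classes are Python's own; the kinds of runtime errors Reactis detects in models and C code are described in its user guides.

```python
GAIN_TABLE = [1.0, 0.8, 0.5]

def controller_step(speed, gear):
    """Toy step function containing two latent runtime errors."""
    time_to_stop = 100.0 / speed   # fails when speed == 0
    gain = GAIN_TABLE[gear]        # fails when gear is past the table end
    return time_to_stop * gain

def find_runtime_errors(step, tests):
    """Run every test and return (test_index, inputs, error_name) for failures."""
    errors = []
    for index, inputs in enumerate(tests):
        try:
            step(*inputs)
        except (ZeroDivisionError, IndexError) as exc:
            errors.append((index, inputs, type(exc).__name__))
    return errors

# Each error only appears if some test exercises the triggering input.
tests = [(50.0, 0), (0.0, 1), (20.0, 3)]
for error in find_runtime_errors(controller_step, tests):
    print(error)
```

A suite containing only the first test would report no errors even though both defects are present, which is the reason for generating tests that reach a high level of coverage.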

6.3. Measuring Coverage of Externally Generated Tests

Requirement 9.4.4 of ISO 26262-6 mandates that the structural coverage achieved when executing tests be measured. Statement, Branch, and Modified Condition/Decision Coverage (MC/DC) are recommended for unit testing. These metrics (and others) are tracked in the model by Reactis for Simulink and within C code by Reactis for C. Requirement 10.4.4 of ISO 26262-6 recommends measuring function coverage and call coverage during integration testing. Reactis for C tracks both of these metrics. To measure the structural coverage of a set of tests created outside Reactis, do the following:

1. Import external tests from a CSV file (see Reactis User’s Guide: Importing Test Suites or Reactis for C User’s Guide: Importing Test Suites).

2. Execute the tests in Reactis Simulator on the model or C code to generate a coverage report (see Reactis User’s Guide: Creating Test Execution Reports or Reactis for C User’s Guide: Creating Test Execution Reports).

If coverage does not reach 100%, Reactis will highlight the model/code so that uncovered targets can be easily identified. If the level of coverage is judged to be insufficient, additional testing can be performed (e.g., via user-constructed tests, which are described in Section 6.8), or a rationale based on other methods may be provided in accordance with requirement 9.4.4 of ISO 26262-6.

See requirement 9.4.3 of ISO 26262-6 for guidance on how to generate tests.
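As a concrete illustration of the MC/DC metric mentioned in this section, the Python sketch below checks which conditions of a single decision are covered by a set of tests. It uses the common unique-cause formulation, in which a condition is covered when two tests differ only in that condition and produce different decision outcomes. The decision `a and (b or c)` and the helper name are hypothetical, and the exact criteria applied by Reactis may differ from this simplified version.

```python
from itertools import combinations

def decision(a, b, c):
    """Example decision with three conditions."""
    return a and (b or c)

def mcdc_covered_conditions(tests):
    """Return the indices of conditions shown to independently affect
    the decision outcome (unique-cause MC/DC)."""
    covered = set()
    for t1, t2 in combinations(tests, 2):
        differing = [i for i in range(len(t1)) if t1[i] != t2[i]]
        if len(differing) == 1 and decision(*t1) != decision(*t2):
            covered.add(differing[0])
    return covered

# Hypothetical externally created tests, given as (a, b, c) tuples.
tests = [(True, True, False), (False, True, False), (True, False, False)]
print(mcdc_covered_conditions(tests))  # {0, 1}: condition c is not covered

# One more test closes the gap for condition c.
tests.append((True, False, True))
print(mcdc_covered_conditions(tests))  # {0, 1, 2}
```

The first three tests already exercise both outcomes of the decision, yet they leave condition `c` uncovered; this is the kind of gap a coverage report makes visible.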

6.4. Checking for Runtime Errors in Externally Generated Tests

Requirement 7.4.14 of ISO 26262-6 specifies simulation of the dynamic behavior of the design as a method for verification of the software architectural design. Additionally, ISO 26262-6 sub-clause 8.4.5 describes design principles for software unit design and implementation that must be addressed at the source code level and unit level in order to achieve a certain level of robustness, including methods to detect and eliminate runtime errors.

Reactis offers an easy way to perform a comprehensive simulation of a model (or C code) for a set of tests created outside Reactis in order to detect runtime errors:

1. Import external tests from a CSV file (see Reactis User’s Guide: Importing Test Suites or Reactis for C User’s Guide: Importing Test Suites).

2. Execute the tests in Reactis Simulator on the model or C code (see Reactis User’s Guide: Executing Test Suites Using Reactis Simulator or Reactis for C User’s Guide: Creating Test Execution Reports).

6.5. Checking if Model or Code Satisfies Requirements

Requirement 10.4.2 of ISO 26262-6 specifies that software integration shall be verified by a variety of methods, including requirements-based testing, to provide evidence that the hierarchically integrated software units, the software components, and the integrated embedded software achieve the specified functionality.

Reactis can be used to verify the model or C code against these requirements as follows:

1. Analyze the system requirements. Express the requirements in the form of Validator assertions and user-defined targets (see Reactis User’s Guide: Validator or Reactis for C User’s Guide: Validator).

2. Run Reactis Tester to search for scenarios that cover user-defined targets or detect assertion failures (see Reactis User’s Guide: Tester or Reactis for C User’s Guide: Tester).

3. Use Reactis Simulator to diagnose and debug any errors (see Reactis User’s Guide: Simulator or Reactis for C User’s Guide: Simulator).

4. Generate a report from the final test suite, which covers all user-defined targets and reports no assertion failures on the model, code, or executable, to demonstrate that the requirements are satisfied (see Reactis User’s Guide: Creating Test Execution Reports or Reactis for C User’s Guide: Creating Test Execution Reports).
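The idea of expressing a requirement as an assertion that is checked at every simulation step can be sketched in plain Python. The example is hypothetical throughout: the toy cruise-control step functions, the requirement "while the brake is pressed, cruise control must be inactive", and the helper `check_requirement` are invented for illustration and are not Validator syntax.

```python
def cruise_step(active, set_button, brake):
    """Toy cruise control: the brake has priority over the set button."""
    if brake:
        return False
    if set_button:
        return True
    return active

def buggy_cruise_step(active, set_button, brake):
    """Faulty variant: the set button wrongly has priority over the brake."""
    if set_button:
        return True
    if brake:
        return False
    return active

def brake_disengages(brake, active):
    """Requirement as a predicate: brake pressed implies cruise inactive."""
    return (not brake) or (not active)

def check_requirement(step, tests):
    """Return (test_index, step_index) for every step violating the requirement."""
    failures = []
    for test_index, steps in enumerate(tests):
        active = False
        for step_index, (set_button, brake) in enumerate(steps):
            active = step(active, set_button, brake)
            if not brake_disengages(brake, active):
                failures.append((test_index, step_index))
    return failures

# Each test is a sequence of (set_button, brake) inputs.
tests = [
    [(True, False), (False, True)],
    [(True, True), (False, False)],
]
print(check_requirement(cruise_step, tests))        # []
print(check_requirement(buggy_cruise_step, tests))  # [(1, 0)]
```

The violation is only exposed by the test that presses both inputs in the same step, which is why a tool-driven search for violating scenarios is useful alongside hand-written tests.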

6.6. Detect Dead Code in Model or C Code

Requirement 9.4.4 of ISO 26262-6 specifies that structural coverage shall be measured at the software unit level and analyzed to reveal shortcomings, including dead code.

Reactis offers an easy way to detect dead code:

1. Generate a test suite for a model or C code in Reactis Tester (see Reactis User’s Guide: Tester or Reactis for C User’s Guide: Tester).

2. Execute the test suite in Reactis Simulator (see Reactis User’s Guide: Simulator or Reactis for C User’s Guide: Simulator).

3. Examine the targets flagged as unreachable.
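The following Python sketch shows what a dead branch looks like and how it shows up as a target that no test ever reaches. The function `classify`, its thresholds, and the manual hit tracking are hypothetical and only mimic, in a very simplified way, what a coverage tool records automatically.

```python
ALL_TARGETS = {"overheat", "critical", "normal"}

def classify(temp, hits):
    """Classify a temperature, recording which branch was taken in `hits`."""
    if temp > 100:
        hits.add("overheat")
        return "overheat"
    elif temp > 150:
        # Dead code: any temp > 150 already satisfied temp > 100 above.
        hits.add("critical")
        return "critical"
    else:
        hits.add("normal")
        return "normal"

def uncovered_targets(inputs):
    """Run classify on every input and return the branches never taken."""
    hits = set()
    for temp in inputs:
        classify(temp, hits)
    return ALL_TARGETS - hits

# Even a sweep over the whole plausible input range never reaches "critical".
print(uncovered_targets(range(-50, 301)))  # {'critical'}
```

A target that stays uncovered under testing is only a candidate for dead code; confirming that it is truly unreachable requires examining the logic, as in the comment above.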

6.7. Walkthroughs and Inspections

Requirement 7.4.14 of ISO 26262-6 outlines how the software architectural design shall be verified using a combination of software architectural design verification methods, including walkthroughs and inspections. Requirement 9.4.2 of ISO 26262-6 outlines walkthroughs and inspections similarly, but at the software unit level.

Reactis supports walkthroughs and inspections with the following capabilities:

- Hierarchical browsing of models, including Simulink, Stateflow, C code, and Embedded MATLAB (EML).

- Signal highlighting to help trace signals through a model.

- Text search of a model (including C and EML code).

6.8. User-Constructed Tests

Requirements outlined in clauses 9 (Software unit verification) and 10 (Software integration and verification) of ISO 26262-6 detail the verification of the software functional and safety requirements. Reactis Tester provides an automated, rigorous approach to generate test suites. In addition, Reactis offers the user a way to manually create and expand test suites in order to satisfy the safety requirements related to software verification.

The user-guided simulation feature of Reactis Simulator lets a user either extend existing tests or create new tests interactively. As a model or code executes, the user can specify values for one or more inputs at each step in order to construct a desired testing scenario. The specific steps to do this are as follows:

1. Load the model or code in Reactis Simulator (see Reactis User’s Guide: Simulator or Reactis for C User’s Guide: Simulator).

2. Load a previously created test suite if desired. If a test is being extended, select and execute the test (see Reactis User’s Guide: Test Input Mode or Reactis for C User’s Guide: Test Input Mode).

3. Enter user-guided simulation mode and execute simulation steps until the test objectives are satisfied, then add the steps to the current test and save the test suite (see Reactis User’s Guide: User Input Mode or Reactis for C User’s Guide: User Input Mode).